DataSetInfo : [dataset] : Added class "Signal"

: Add Tree sgn of type Signal with 2000 events

DataSetInfo : [dataset] : Added class "Background"

: Add Tree bkg of type Background with 2000 events

Factory : Booking method: TMVA_LSTM

:

: Parsing option string:

: ... "!H:V:ErrorStrategy=CROSSENTROPY:VarTransform=None:WeightInitialization=XAVIERUNIFORM:ValidationSize=0.2:RandomSeed=1234:InputLayout=10|30:Layout=LSTM|10|30|10|0|1,RESHAPE|FLAT,DENSE|64|TANH,LINEAR:TrainingStrategy=LearningRate=1e-3,Momentum=0.0,Repetitions=1,ConvergenceSteps=5,BatchSize=100,TestRepetitions=1,WeightDecay=1e-2,Regularization=None,MaxEpochs=10Optimizer=ADAM,DropConfig=0.0+0.+0.+0.:Architecture=CPU"

: The following options are set:

: - By User:

: <none>

: - Default:

: Boost_num: "0" [Number of times the classifier will be boosted]

: Parsing option string:

: ... "!H:V:ErrorStrategy=CROSSENTROPY:VarTransform=None:WeightInitialization=XAVIERUNIFORM:ValidationSize=0.2:RandomSeed=1234:InputLayout=10|30:Layout=LSTM|10|30|10|0|1,RESHAPE|FLAT,DENSE|64|TANH,LINEAR:TrainingStrategy=LearningRate=1e-3,Momentum=0.0,Repetitions=1,ConvergenceSteps=5,BatchSize=100,TestRepetitions=1,WeightDecay=1e-2,Regularization=None,MaxEpochs=10Optimizer=ADAM,DropConfig=0.0+0.+0.+0.:Architecture=CPU"

: The following options are set:

: - By User:

: V: "True" [Verbose output (short form of "VerbosityLevel" below - overrides the latter one)]

: VarTransform: "None" [List of variable transformations performed before training, e.g., "D_Background,P_Signal,G,N_AllClasses" for: "Decorrelation, PCA-transformation, Gaussianisation, Normalisation, each for the given class of events ('AllClasses' denotes all events of all classes, if no class indication is given, 'All' is assumed)"]

: H: "False" [Print method-specific help message]

: InputLayout: "10|30" [The Layout of the input]

: Layout: "LSTM|10|30|10|0|1,RESHAPE|FLAT,DENSE|64|TANH,LINEAR" [Layout of the network.]

: ErrorStrategy: "CROSSENTROPY" [Loss function: Mean squared error (regression) or cross entropy (binary classification).]

: WeightInitialization: "XAVIERUNIFORM" [Weight initialization strategy]

: RandomSeed: "1234" [Random seed used for weight initialization and batch shuffling]

: ValidationSize: "0.2" [Part of the training data to use for validation. Specify as 0.2 or 20% to use a fifth of the data set as validation set. Specify as 100 to use exactly 100 events. (Default: 20%)]

: Architecture: "CPU" [Which architecture to perform the training on.]

: TrainingStrategy: "LearningRate=1e-3,Momentum=0.0,Repetitions=1,ConvergenceSteps=5,BatchSize=100,TestRepetitions=1,WeightDecay=1e-2,Regularization=None,MaxEpochs=10Optimizer=ADAM,DropConfig=0.0+0.+0.+0." [Defines the training strategies.]

: - Default:

: VerbosityLevel: "Default" [Verbosity level]

: CreateMVAPdfs: "False" [Create PDFs for classifier outputs (signal and background)]

: IgnoreNegWeightsInTraining: "False" [Events with negative weights are ignored in the training (but are included for testing and performance evaluation)]

: BatchLayout: "0|0|0" [The Layout of the batch]

: Will now use the CPU architecture with BLAS and IMT support !
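The booking option string logged above is a colon-separated list of flags and `key=value` pairs, and `TrainingStrategy` carries its own comma-separated settings. The sketch below is not TMVA's parser; `parse_tmva_options` and `parse_strategy` are hypothetical helpers that only illustrate the layout. Splitting the string this way also shows that `MaxEpochs=10Optimizer=ADAM` is a single token, because no comma separates the two settings in the logged string.

```python
# Illustrative helpers only; these names are not part of TMVA or ROOT.
# OPTIONS is the TMVA_LSTM option string copied from the log.
OPTIONS = (
    "!H:V:ErrorStrategy=CROSSENTROPY:VarTransform=None:"
    "WeightInitialization=XAVIERUNIFORM:ValidationSize=0.2:RandomSeed=1234:"
    "InputLayout=10|30:Layout=LSTM|10|30|10|0|1,RESHAPE|FLAT,DENSE|64|TANH,LINEAR:"
    "TrainingStrategy=LearningRate=1e-3,Momentum=0.0,Repetitions=1,"
    "ConvergenceSteps=5,BatchSize=100,TestRepetitions=1,WeightDecay=1e-2,"
    "Regularization=None,MaxEpochs=10Optimizer=ADAM,DropConfig=0.0+0.+0.+0.:"
    "Architecture=CPU"
)


def parse_tmva_options(option_string):
    """Split on ':' and separate bare flags from key=value options."""
    flags, options = [], {}
    for token in option_string.split(":"):
        # Split at the first '=' only, so nested settings stay in the value.
        key, sep, value = token.partition("=")
        if sep:
            options[key] = value
        else:
            flags.append(token)
    return flags, options


def parse_strategy(strategy):
    """Split one TrainingStrategy value on ',' into its settings."""
    settings = {}
    for item in strategy.split(","):
        key, _, value = item.partition("=")
        settings[key] = value
    return settings


flags, options = parse_tmva_options(OPTIONS)
strategy = parse_strategy(options["TrainingStrategy"])

print(flags)                  # bare flags such as !H and V
print(options["Layout"])      # layer specification
print(strategy["MaxEpochs"])  # the fused token is visible here
```

With this split, `Optimizer` never appears as its own key, which is one way to spot a missing separator in a long option string.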

Factory : Booking method: TMVA_DNN

:

: Parsing option string:

: ... "!H:V:ErrorStrategy=CROSSENTROPY:VarTransform=None:WeightInitialization=XAVIER:RandomSeed=0:InputLayout=1|1|300:Layout=DENSE|64|TANH,DENSE|TANH|64,DENSE|TANH|64,LINEAR:TrainingStrategy=LearningRate=1e-3,Momentum=0.0,Repetitions=1,ConvergenceSteps=10,BatchSize=256,TestRepetitions=1,WeightDecay=1e-4,Regularization=None,MaxEpochs=20DropConfig=0.0+0.+0.+0.,Optimizer=ADAM:CPU"

: The following options are set:

: - By User:

: <none>

: - Default:

: Boost_num: "0" [Number of times the classifier will be boosted]

: Parsing option string:

: ... "!H:V:ErrorStrategy=CROSSENTROPY:VarTransform=None:WeightInitialization=XAVIER:RandomSeed=0:InputLayout=1|1|300:Layout=DENSE|64|TANH,DENSE|TANH|64,DENSE|TANH|64,LINEAR:TrainingStrategy=LearningRate=1e-3,Momentum=0.0,Repetitions=1,ConvergenceSteps=10,BatchSize=256,TestRepetitions=1,WeightDecay=1e-4,Regularization=None,MaxEpochs=20DropConfig=0.0+0.+0.+0.,Optimizer=ADAM:CPU"

: The following options are set:

: - By User:

: V: "True" [Verbose output (short form of "VerbosityLevel" below - overrides the latter one)]

: VarTransform: "None" [List of variable transformations performed before training, e.g., "D_Background,P_Signal,G,N_AllClasses" for: "Decorrelation, PCA-transformation, Gaussianisation, Normalisation, each for the given class of events ('AllClasses' denotes all events of all classes, if no class indication is given, 'All' is assumed)"]

: H: "False" [Print method-specific help message]

: InputLayout: "1|1|300" [The Layout of the input]

: Layout: "DENSE|64|TANH,DENSE|TANH|64,DENSE|TANH|64,LINEAR" [Layout of the network.]

: ErrorStrategy: "CROSSENTROPY" [Loss function: Mean squared error (regression) or cross entropy (binary classification).]

: WeightInitialization: "XAVIER" [Weight initialization strategy]

: RandomSeed: "0" [Random seed used for weight initialization and batch shuffling]

: Architecture: "CPU" [Which architecture to perform the training on.]

: TrainingStrategy: "LearningRate=1e-3,Momentum=0.0,Repetitions=1,ConvergenceSteps=10,BatchSize=256,TestRepetitions=1,WeightDecay=1e-4,Regularization=None,MaxEpochs=20DropConfig=0.0+0.+0.+0.,Optimizer=ADAM" [Defines the training strategies.]

: - Default:

: VerbosityLevel: "Default" [Verbosity level]

: CreateMVAPdfs: "False" [Create PDFs for classifier outputs (signal and background)]

: IgnoreNegWeightsInTraining: "False" [Events with negative weights are ignored in the training (but are included for testing and performance evaluation)]

: BatchLayout: "0|0|0" [The Layout of the batch]

: ValidationSize: "20%" [Part of the training data to use for validation. Specify as 0.2 or 20% to use a fifth of the data set as validation set. Specify as 100 to use exactly 100 events. (Default: 20%)]

: Will now use the CPU architecture with BLAS and IMT support !

Running with nthreads = 4

--- RNNClassification : Using input file: time_data_t10_d30.root

number of variables is 300

vars_time0[0]

vars_time0[1]

vars_time0[2]

vars_time0[3]

vars_time0[4]

vars_time0[5]

vars_time0[6]

vars_time0[7]

vars_time0[8]

vars_time0[9]

vars_time0[10]

vars_time0[11]

vars_time0[12]

vars_time0[13]

vars_time0[14]

vars_time0[15]

vars_time0[16]

vars_time0[17]

vars_time0[18]

vars_time0[19]

vars_time0[20]

vars_time0[21]

vars_time0[22]

vars_time0[23]

vars_time0[24]

vars_time0[25]

vars_time0[26]

vars_time0[27]

vars_time0[28]

vars_time0[29]

vars_time1[0]

vars_time1[1]

vars_time1[2]

vars_time1[3]

vars_time1[4]

vars_time1[5]

vars_time1[6]

vars_time1[7]

vars_time1[8]

vars_time1[9]

vars_time1[10]

vars_time1[11]

vars_time1[12]

vars_time1[13]

vars_time1[14]

vars_time1[15]

vars_time1[16]

vars_time1[17]

vars_time1[18]

vars_time1[19]

vars_time1[20]

vars_time1[21]

vars_time1[22]

vars_time1[23]

vars_time1[24]

vars_time1[25]

vars_time1[26]

vars_time1[27]

vars_time1[28]

vars_time1[29]

vars_time2[0]

vars_time2[1]

vars_time2[2]

vars_time2[3]

vars_time2[4]

vars_time2[5]

vars_time2[6]

vars_time2[7]

vars_time2[8]

vars_time2[9]

vars_time2[10]

vars_time2[11]

vars_time2[12]

vars_time2[13]

vars_time2[14]

vars_time2[15]

vars_time2[16]

vars_time2[17]

vars_time2[18]

vars_time2[19]

vars_time2[20]

vars_time2[21]

vars_time2[22]

vars_time2[23]

vars_time2[24]

vars_time2[25]

vars_time2[26]

vars_time2[27]

vars_time2[28]

vars_time2[29]

vars_time3[0]

vars_time3[1]

vars_time3[2]

vars_time3[3]

vars_time3[4]

vars_time3[5]

vars_time3[6]

vars_time3[7]

vars_time3[8]

vars_time3[9]

vars_time3[10]

vars_time3[11]

vars_time3[12]

vars_time3[13]

vars_time3[14]

vars_time3[15]

vars_time3[16]

vars_time3[17]

vars_time3[18]

vars_time3[19]

vars_time3[20]

vars_time3[21]

vars_time3[22]

vars_time3[23]

vars_time3[24]

vars_time3[25]

vars_time3[26]

vars_time3[27]

vars_time3[28]

vars_time3[29]

vars_time4[0]

vars_time4[1]

vars_time4[2]

vars_time4[3]

vars_time4[4]

vars_time4[5]

vars_time4[6]

vars_time4[7]

vars_time4[8]

vars_time4[9]

vars_time4[10]

vars_time4[11]

vars_time4[12]

vars_time4[13]

vars_time4[14]

vars_time4[15]

vars_time4[16]

vars_time4[17]

vars_time4[18]

vars_time4[19]

vars_time4[20]

vars_time4[21]

vars_time4[22]

vars_time4[23]

vars_time4[24]

vars_time4[25]

vars_time4[26]

vars_time4[27]

vars_time4[28]

vars_time4[29]

vars_time5[0]

vars_time5[1]

vars_time5[2]

vars_time5[3]

vars_time5[4]

vars_time5[5]

vars_time5[6]

vars_time5[7]

vars_time5[8]

vars_time5[9]

vars_time5[10]

vars_time5[11]

vars_time5[12]

vars_time5[13]

vars_time5[14]

vars_time5[15]

vars_time5[16]

vars_time5[17]

vars_time5[18]

vars_time5[19]

vars_time5[20]

vars_time5[21]

vars_time5[22]

vars_time5[23]

vars_time5[24]

vars_time5[25]

vars_time5[26]

vars_time5[27]

vars_time5[28]

vars_time5[29]

vars_time6[0]

vars_time6[1]

vars_time6[2]

vars_time6[3]

vars_time6[4]

vars_time6[5]

vars_time6[6]

vars_time6[7]

vars_time6[8]

vars_time6[9]

vars_time6[10]

vars_time6[11]

vars_time6[12]

vars_time6[13]

vars_time6[14]

vars_time6[15]

vars_time6[16]

vars_time6[17]

vars_time6[18]

vars_time6[19]

vars_time6[20]

vars_time6[21]

vars_time6[22]

vars_time6[23]

vars_time6[24]

vars_time6[25]

vars_time6[26]

vars_time6[27]

vars_time6[28]

vars_time6[29]

vars_time7[0]

vars_time7[1]

vars_time7[2]

vars_time7[3]

vars_time7[4]

vars_time7[5]

vars_time7[6]

vars_time7[7]

vars_time7[8]

vars_time7[9]

vars_time7[10]

vars_time7[11]

vars_time7[12]

vars_time7[13]

vars_time7[14]

vars_time7[15]

vars_time7[16]

vars_time7[17]

vars_time7[18]

vars_time7[19]

vars_time7[20]

vars_time7[21]

vars_time7[22]

vars_time7[23]

vars_time7[24]

vars_time7[25]

vars_time7[26]

vars_time7[27]

vars_time7[28]

vars_time7[29]

vars_time8[0]

vars_time8[1]

vars_time8[2]

vars_time8[3]

vars_time8[4]

vars_time8[5]

vars_time8[6]

vars_time8[7]

vars_time8[8]

vars_time8[9]

vars_time8[10]

vars_time8[11]

vars_time8[12]

vars_time8[13]

vars_time8[14]

vars_time8[15]

vars_time8[16]

vars_time8[17]

vars_time8[18]

vars_time8[19]

vars_time8[20]

vars_time8[21]

vars_time8[22]

vars_time8[23]

vars_time8[24]

vars_time8[25]

vars_time8[26]

vars_time8[27]

vars_time8[28]

vars_time8[29]

vars_time9[0]

vars_time9[1]

vars_time9[2]

vars_time9[3]

vars_time9[4]

vars_time9[5]

vars_time9[6]

vars_time9[7]

vars_time9[8]

vars_time9[9]

vars_time9[10]

vars_time9[11]

vars_time9[12]

vars_time9[13]

vars_time9[14]

vars_time9[15]

vars_time9[16]

vars_time9[17]

vars_time9[18]

vars_time9[19]

vars_time9[20]

vars_time9[21]

vars_time9[22]

vars_time9[23]

vars_time9[24]

vars_time9[25]

vars_time9[26]

vars_time9[27]

vars_time9[28]

vars_time9[29]

prepared DATA LOADER
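The 300 variables listed above are the 10 arrays `vars_time0` through `vars_time9`, each of size 30, which matches `InputLayout=10|30`. Assuming the flat order is exactly the order printed above (time step first, then array element), the index arithmetic can be sketched as follows. `flat_index` and `unflatten` are hypothetical names used only for this illustration.

```python
# Sketch of the assumed flat variable ordering; not taken from the tutorial code.
N_TIME, N_DIM = 10, 30  # from InputLayout=10|30


def flat_index(t, d):
    """Position of vars_time{t}[{d}] in the flat list of 300 variables."""
    return t * N_DIM + d


def unflatten(i):
    """Inverse mapping: flat position -> (time step, element index)."""
    return divmod(i, N_DIM)


# Rebuild the variable names in the same order as the log prints them.
names = [f"vars_time{t}[{d}]" for t in range(N_TIME) for d in range(N_DIM)]

print(len(names))
print(names[flat_index(3, 7)])
print(unflatten(299))
```

Under this assumption, reshaping a flat event of length 300 to `(10, 30)` in row-major order puts one time step in each row.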

Building recurrent keras model using a LSTM layer

Model: "sequential"
_________________________________________________________________
 Layer (type)                Output Shape              Param #
=================================================================
 reshape (Reshape)           (None, 10, 30)            0
 lstm (LSTM)                 (None, 10, 10)            1640
 flatten (Flatten)           (None, 100)               0
 dense (Dense)               (None, 64)                6464
 dense_1 (Dense)             (None, 2)                 130
=================================================================
Total params: 8234 (32.16 KB)
Trainable params: 8234 (32.16 KB)
Non-trainable params: 0 (0.00 Byte)
_________________________________________________________________
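The parameter counts in the summary above can be checked by hand. The sketch below uses the standard counts for an LSTM layer with bias (four gates, each with input weights, recurrent weights and a bias vector) and for a dense layer with bias. The helper names are hypothetical, and the 4 bytes per parameter is an assumption (float32 weights).

```python
# Worked check of the Keras summary; helper names are illustrative only.

def lstm_params(n_input, n_units):
    """Four gates, each with input weights, recurrent weights and a bias."""
    return 4 * (n_input * n_units + n_units * n_units + n_units)


def dense_params(n_input, n_units):
    """Weight matrix plus one bias per output unit."""
    return n_input * n_units + n_units


lstm = lstm_params(30, 10)        # 30 features per time step, 10 units
dense = dense_params(10 * 10, 64) # flattened (10, 10) LSTM output -> 64
dense_1 = dense_params(64, 2)     # 64 -> 2 output nodes
total = lstm + dense + dense_1

# Assumption: 4 bytes per parameter (float32).
size_kb = total * 4 / 1024

print(lstm, dense, dense_1, total)
print(f"{size_kb:.2f} KB")
```

The three layer counts and their sum agree with the `Param #` column and the `Total params` line of the summary.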

Factory : Booking method: PyKeras_LSTM

:

: Setting up tf.keras

: Using TensorFlow version 2

: Use Keras version from TensorFlow : tf.keras

: Applying GPU option: gpu_options.allow_growth=True

: Loading Keras Model

: Loaded model from file: model_LSTM.h5

Factory : Booking method: BDTG

:

: the option NegWeightTreatment=InverseBoostNegWeights does not exist for BoostType=Grad

: --> change to new default NegWeightTreatment=Pray

: Rebuilding Dataset dataset

: Building event vectors for type 2 Signal

: Dataset[dataset] : create input formulas for tree sgn

: Using variable vars_time0[0] from array expression vars_time0 of size 30

: Using variable vars_time1[0] from array expression vars_time1 of size 30

: Using variable vars_time2[0] from array expression vars_time2 of size 30

: Using variable vars_time3[0] from array expression vars_time3 of size 30

: Using variable vars_time4[0] from array expression vars_time4 of size 30

: Using variable vars_time5[0] from array expression vars_time5 of size 30

: Using variable vars_time6[0] from array expression vars_time6 of size 30

: Using variable vars_time7[0] from array expression vars_time7 of size 30

: Using variable vars_time8[0] from array expression vars_time8 of size 30

: Using variable vars_time9[0] from array expression vars_time9 of size 30

: Building event vectors for type 2 Background

: Dataset[dataset] : create input formulas for tree bkg

: Using variable vars_time0[0] from array expression vars_time0 of size 30

: Using variable vars_time1[0] from array expression vars_time1 of size 30

: Using variable vars_time2[0] from array expression vars_time2 of size 30

: Using variable vars_time3[0] from array expression vars_time3 of size 30

: Using variable vars_time4[0] from array expression vars_time4 of size 30

: Using variable vars_time5[0] from array expression vars_time5 of size 30

: Using variable vars_time6[0] from array expression vars_time6 of size 30

: Using variable vars_time7[0] from array expression vars_time7 of size 30

: Using variable vars_time8[0] from array expression vars_time8 of size 30

: Using variable vars_time9[0] from array expression vars_time9 of size 30

DataSetFactory : [dataset] : Number of events in input trees

:

:

: Number of training and testing events

: ---------------------------------------------------------------------------

: Signal -- training events : 1600

: Signal -- testing events : 400

: Signal -- training and testing events: 2000

: Background -- training events : 1600

: Background -- testing events : 400

: Background -- training and testing events: 2000

:

Factory : Train all methods

Factory : Train method: TMVA_LSTM for Classification

:

: Start of deep neural network training on CPU using MT, nthreads = 4

:

: ***** Deep Learning Network *****

DEEP NEURAL NETWORK: Depth = 4 Input = ( 10, 1, 30 ) Batch size = 100 Loss function = C

Layer 0 LSTM Layer: (NInput = 30, NState = 10, NTime = 10 ) Output = ( 100 , 10 , 10 )

Layer 1 RESHAPE Layer Input = ( 1 , 10 , 10 ) Output = ( 1 , 100 , 100 )

Layer 2 DENSE Layer: ( Input = 100 , Width = 64 ) Output = ( 1 , 100 , 64 ) Activation Function = Tanh

Layer 3 DENSE Layer: ( Input = 64 , Width = 1 ) Output = ( 1 , 100 , 1 ) Activation Function = Identity

: Using 2560 events for training and 640 for testing

: Compute initial loss on the validation data

: Training phase 1 of 1: Optimizer ADAM (beta1=0.9,beta2=0.999,eps=1e-07) Learning rate = 0.001 regularization 0 minimum error = 0.711598

: --------------------------------------------------------------

: Epoch | Train Err. Val. Err. t(s)/epoch t(s)/Loss nEvents/s Conv. Steps

: --------------------------------------------------------------

: Start epoch iteration ...

: 1 Minimum Test error found - save the configuration

: 1 | 0.703307 0.699943 0.660701 0.0435736 4051.03 0

: 2 Minimum Test error found - save the configuration

: 2 | 0.690502 0.687676 0.664275 0.0419723 4017.34 0

: 3 Minimum Test error found - save the configuration

: 3 | 0.684609 0.683907 0.638942 0.0413177 4183.23 0

: 4 | 0.675435 0.686458 0.593945 0.0397581 4511.11 1

: 5 | 0.669233 0.686385 0.58328 0.0416495 4615.7 2

: 6 Minimum Test error found - save the configuration

: 6 | 0.65044 0.660125 0.574416 0.0393857 4672.63 0

: 7 Minimum Test error found - save the configuration

: 7 | 0.619836 0.639189 0.564005 0.0391851 4763.54 0

: 8 | 0.593749 0.645963 0.559367 0.0391047 4805.27 1

: 9 Minimum Test error found - save the configuration

: 9 | 0.57412 0.627973 0.558399 0.0391817 4814.94 0

: 10 Minimum Test error found - save the configuration

: 10 | 0.561584 0.620373 0.560193 0.0390057 4796.74 0

:

: Elapsed time for training with 3200 events: 6.01 sec
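The `Conv. Steps` column in the table above appears to count the epochs since the lowest validation error so far, resetting to 0 whenever "Minimum Test error found" is printed. The sketch below reproduces the column from the logged `Val. Err.` values under that assumption, starting from the initial validation loss reported as `minimum error = 0.711598`. `convergence_steps` is a hypothetical helper, not a TMVA function, and the sketch does not model how `ConvergenceSteps=5` ends training.

```python
# Sketch under the assumption stated above; not TMVA's implementation.

def convergence_steps(initial_error, val_errors):
    """Count epochs since the best validation error, epoch by epoch."""
    best = initial_error
    steps = 0
    column = []
    for err in val_errors:
        if err < best:
            # New minimum: remember it and reset the counter.
            best, steps = err, 0
        else:
            steps += 1
        column.append(steps)
    return column


# Val. Err. values for TMVA_LSTM, copied from the table above.
val_errors = [0.699943, 0.687676, 0.683907, 0.686458, 0.686385,
              0.660125, 0.639189, 0.645963, 0.627973, 0.620373]

column = convergence_steps(0.711598, val_errors)
print(column)
```

The computed list matches the logged column for all ten epochs, which supports the reading but does not prove it is the exact rule TMVA applies.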

: Evaluate deep neural network on CPU using batches with size = 100

:

TMVA_LSTM : [dataset] : Evaluation of TMVA_LSTM on training sample (3200 events)

: Elapsed time for evaluation of 3200 events: 0.208 sec

: Creating xml weight file: dataset/weights/TMVAClassification_TMVA_LSTM.weights.xml

: Creating standalone class: dataset/weights/TMVAClassification_TMVA_LSTM.class.C

Factory : Training finished

:

Factory : Train method: TMVA_DNN for Classification

:

: Start of deep neural network training on CPU using MT, nthreads = 4

:

: ***** Deep Learning Network *****

DEEP NEURAL NETWORK: Depth = 4 Input = ( 1, 1, 300 ) Batch size = 256 Loss function = C

Layer 0 DENSE Layer: ( Input = 300 , Width = 64 ) Output = ( 1 , 256 , 64 ) Activation Function = Tanh

Layer 1 DENSE Layer: ( Input = 64 , Width = 64 ) Output = ( 1 , 256 , 64 ) Activation Function = Tanh

Layer 2 DENSE Layer: ( Input = 64 , Width = 64 ) Output = ( 1 , 256 , 64 ) Activation Function = Tanh

Layer 3 DENSE Layer: ( Input = 64 , Width = 1 ) Output = ( 1 , 256 , 1 ) Activation Function = Identity

: Using 2560 events for training and 640 for testing

: Compute initial loss on the validation data

: Training phase 1 of 1: Optimizer ADAM (beta1=0.9,beta2=0.999,eps=1e-07) Learning rate = 0.001 regularization 0 minimum error = 0.881702

: --------------------------------------------------------------

: Epoch | Train Err. Val. Err. t(s)/epoch t(s)/Loss nEvents/s Conv. Steps

: --------------------------------------------------------------

: Start epoch iteration ...

: 1 Minimum Test error found - save the configuration

: 1 | 0.762028 0.716164 0.19384 0.0161108 14403.9 0

: 2 | 0.693746 0.719079 0.190757 0.0150775 14572 1

: 3 Minimum Test error found - save the configuration

: 3 | 0.676384 0.702461 0.189395 0.0154282 14715.4 0

: 4 Minimum Test error found - save the configuration

: 4 | 0.670099 0.701326 0.193289 0.0155043 14399.5 0

: 5 Minimum Test error found - save the configuration

: 5 | 0.669562 0.696336 0.191074 0.0154266 14574.7 0

: 6 | 0.666535 0.704782 0.189687 0.0150605 14659.8 1

: 7 | 0.671069 0.703864 0.189408 0.0150489 14682.3 2

: 8 Minimum Test error found - save the configuration

: 8 | 0.665261 0.692158 0.190015 0.0154629 14666.1 0

: 9 Minimum Test error found - save the configuration

: 9 | 0.660517 0.686126 0.191437 0.0155362 14553.7 0

: 10 | 0.666378 0.695635 0.19041 0.0151151 14604 1

: 11 | 0.665625 0.689145 0.189303 0.0149811 14685.5 2

: 12 | 0.664411 0.709501 0.190234 0.0149128 14601.8 3

: 13 Minimum Test error found - save the configuration

: 13 | 0.652018 0.678593 0.18852 0.0151742 14768.2 0

: 14 | 0.640669 0.694924 0.188145 0.0148274 14770.6 1

: 15 | 0.631917 0.710249 0.188316 0.0148564 14758.5 2

: 16 | 0.652417 0.707631 0.188238 0.0149037 14769.2 3

: 17 Minimum Test error found - save the configuration

: 17 | 0.649684 0.674899 0.189811 0.0154314 14680.6 0

: 18 | 0.640858 0.689889 0.191787 0.0151106 14489.8 1

: 19 | 0.629275 0.680427 0.19 0.0150154 14629.9 2

: 20 | 0.633441 0.695718 0.188291 0.0147331 14750.1 3

:

: Elapsed time for training with 3200 events: 3.82 sec

: Evaluate deep neural network on CPU using batches with size = 256

:

TMVA_DNN : [dataset] : Evaluation of TMVA_DNN on training sample (3200 events)

: Elapsed time for evaluation of 3200 events: 0.1 sec

: Creating xml weight file: dataset/weights/TMVAClassification_TMVA_DNN.weights.xml

: Creating standalone class: dataset/weights/TMVAClassification_TMVA_DNN.class.C

Factory : Training finished

:

Factory : Train method: PyKeras_LSTM for Classification

:

:

: ================================================================

: Help for MVA method [ PyKeras_LSTM ] :

:

: Keras is a high-level API for the Theano and Tensorflow packages.

: This method wraps the training and predictions steps of the Keras

: Python package for TMVA, so that dataloading, preprocessing and

: evaluation can be done within the TMVA system. To use this Keras

: interface, you have to generate a model with Keras first. Then,

: this model can be loaded and trained in TMVA.

:

:

: <Suppress this message by specifying "!H" in the booking option>

: ================================================================

:

: Split TMVA training data in 2560 training events and 640 validation events
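The split reported above follows from the 1600 signal and 1600 background training events and a 20% validation fraction. The sketch below redoes that arithmetic and also derives the 26 steps per epoch that the Keras progress output shows. The batch size of 100 is an assumption: the log does not print it for the PyKeras method.

```python
import math

# Numbers from the log: 1600 signal + 1600 background training events.
n_total = 1600 + 1600
validation_fraction = 0.2  # 20% of the training data held out

# Assumption: Keras batch size of 100 (not printed in this log).
batch_size = 100

n_validation = round(n_total * validation_fraction)
n_training = n_total - n_validation

# The last batch is partial, so the step count is rounded up.
steps_per_epoch = math.ceil(n_training / batch_size)

print(n_training, n_validation, steps_per_epoch)
```

With these numbers the final step of each epoch would contain only 60 events, since 2560 is not a multiple of 100.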

: Training Model Summary

saved recurrent model model_LSTM.h5

Booking Keras model LSTM

Model: "sequential"
_________________________________________________________________
 Layer (type)                Output Shape              Param #
=================================================================
 reshape (Reshape)           (None, 10, 30)            0
 lstm (LSTM)                 (None, 10, 10)            1640
 flatten (Flatten)           (None, 100)               0
 dense (Dense)               (None, 64)                6464
 dense_1 (Dense)             (None, 2)                 130
=================================================================
Total params: 8234 (32.16 KB)
Trainable params: 8234 (32.16 KB)
Non-trainable params: 0 (0.00 Byte)
_________________________________________________________________

: Option SaveBestOnly: Only model weights with smallest validation loss will be stored

Epoch 1/10

Epoch 1: val_loss improved from inf to 0.69611, saving model to trained_model_LSTM.h5

26/26 [==============================] - 3s 39ms/step - loss: 0.7051 - accuracy: 0.4871 - val_loss: 0.6961 - val_accuracy: 0.4938

Epoch 2/10

Epoch 2: val_loss improved from 0.69611 to 0.69112, saving model to trained_model_LSTM.h5

26/26 [==============================] - 0s 11ms/step - loss: 0.6940 - accuracy: 0.5176 - val_loss: 0.6911 - val_accuracy: 0.5344

Epoch 3/10

Epoch 3: val_loss improved from 0.69112 to 0.66814, saving model to trained_model_LSTM.h5

26/26 [==============================] - 0s 9ms/step - loss: 0.6816 - accuracy: 0.5617 - val_loss: 0.6681 - val_accuracy: 0.6187

Epoch 4/10

Epoch 4: val_loss improved from 0.66814 to 0.65971, saving model to trained_model_LSTM.h5

26/26 [==============================] - 0s 9ms/step - loss: 0.6559 - accuracy: 0.6270 - val_loss: 0.6597 - val_accuracy: 0.5953

Epoch 5/10

Epoch 5: val_loss improved from 0.65971 to 0.63595, saving model to trained_model_LSTM.h5

26/26 [==============================] - 0s 8ms/step - loss: 0.6377 - accuracy: 0.6438 - val_loss: 0.6359 - val_accuracy: 0.6453

Epoch 6/10

Epoch 6: val_loss improved from 0.63595 to 0.62056, saving model to trained_model_LSTM.h5

26/26 [==============================] - 0s 8ms/step - loss: 0.6101 - accuracy: 0.6699 - val_loss: 0.6206 - val_accuracy: 0.6422

Epoch 7/10

Epoch 7: val_loss improved from 0.62056 to 0.59663, saving model to trained_model_LSTM.h5

26/26 [==============================] - 0s 9ms/step - loss: 0.5889 - accuracy: 0.6891 - val_loss: 0.5966 - val_accuracy: 0.6891

Epoch 8/10

Epoch 8: val_loss improved from 0.59663 to 0.57793, saving model to trained_model_LSTM.h5

26/26 [==============================] - 0s 9ms/step - loss: 0.5669 - accuracy: 0.7102 - val_loss: 0.5779 - val_accuracy: 0.7125

Epoch 9/10

Epoch 9: val_loss did not improve from 0.57793

26/26 [==============================] - 0s 8ms/step - loss: 0.5511 - accuracy: 0.7207 - val_loss: 0.5844 - val_accuracy: 0.6828

Epoch 10/10

Epoch 10: val_loss improved from 0.57793 to 0.54873, saving model to trained_model_LSTM.h5

26/26 [==============================] - 0s 9ms/step - loss: 0.5363 - accuracy: 0.7383 - val_loss: 0.5487 - val_accuracy: 0.7172

: Getting training history for item:0 name = 'loss'

: Getting training history for item:1 name = 'accuracy'

: Getting training history for item:2 name = 'val_loss'

: Getting training history for item:3 name = 'val_accuracy'

: Elapsed time for training with 3200 events: 4.8 sec

: Setting up tf.keras

: Using TensorFlow version 2

: Use Keras version from TensorFlow : tf.keras

: Applying GPU option: gpu_options.allow_growth=True

: Disabled TF eager execution when evaluating model

: Loading Keras Model

: Loaded model from file: trained_model_LSTM.h5

PyKeras_LSTM : [dataset] : Evaluation of PyKeras_LSTM on training sample (3200 events)

: Elapsed time for evaluation of 3200 events: 0.359 sec

: Creating xml weight file: dataset/weights/TMVAClassification_PyKeras_LSTM.weights.xml

: Creating standalone class: dataset/weights/TMVAClassification_PyKeras_LSTM.class.C

Factory : Training finished

:

Factory : Train method: BDTG for Classification

:

:

: ================================================================

: Help for MVA method [ BDTG ] :

:

: --- Short description:

:

: Boosted Decision Trees are a collection of individual decision

: trees which form a multivariate classifier by (weighted) majority

: vote of the individual trees. Consecutive decision trees are

: trained using the original training data set with re-weighted

: events. By default, the AdaBoost method is employed, which gives

: events that were misclassified in the previous tree a larger

: weight in the training of the following tree.

:

: Decision trees are a sequence of binary splits of the data sample

: using a single discriminant variable at a time. A test event

: ending up after the sequence of left-right splits in a final

: ("leaf") node is classified as either signal or background

: depending on the majority type of training events in that node.

:

: --- Performance optimisation:

:

: By the nature of the binary splits performed on the individual

: variables, decision trees do not deal well with linear correlations

: between variables (they need to approximate the linear split in

: the two dimensional space by a sequence of splits on the two

: variables individually). Hence decorrelation could be useful

: to optimise the BDT performance.

:

: --- Performance tuning via configuration options:

:

: The two most important parameters in the configuration are the

: minimal number of events requested by a leaf node as percentage of the

: number of training events (option "MinNodeSize" replacing the actual number

: of events "nEventsMin" as given in earlier versions

: If this number is too large, detailed features

: in the parameter space are hard to be modelled. If it is too small,

: the risk to overtrain rises and boosting seems to be less effective

: typical values from our current experience for best performance

: are between 0.5(%) and 10(%)

:

: The default minimal number is currently set to

: max(20, (N_training_events / N_variables^2 / 10))

: and can be changed by the user.

:

: The other crucial parameter, the pruning strength ("PruneStrength"),

: is also related to overtraining. It is a regularisation parameter

: that is used when determining after the training which splits

: are considered statistically insignificant and are removed. The

: user is advised to carefully watch the BDT screen output for

: the comparison between efficiencies obtained on the training and

: the independent test sample. They should be equal within statistical

: errors, in order to minimize statistical fluctuations in different samples.

:

: <Suppress this message by specifying "!H" in the booking option>

: ================================================================

:
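The default leaf-size formula quoted in the help text can be evaluated for this run. The sketch below is only illustrative arithmetic in Python: it takes the formula `max(20, N_training_events / N_variables^2 / 10)` exactly as printed, with 3200 training events and 300 input variables as reported elsewhere in this log. The function names are hypothetical, and the log does not confirm that the booked BDTG actually applies this default (the help text itself says "MinNodeSize" replaces "nEventsMin").

```python
def default_min_events(n_training_events: int, n_variables: int) -> float:
    """Default minimal number of events per leaf, as quoted in the help text."""
    return max(20.0, n_training_events / n_variables**2 / 10)


def as_percentage(n_events: float, n_training_events: int) -> float:
    """Express an event count as a percentage of the training sample."""
    return 100.0 * n_events / n_training_events


n_train = 3200  # 1600 signal + 1600 background training events (from this log)
n_vars = 300    # number of input variables (from this log)

min_events = default_min_events(n_train, n_vars)
min_node_size = as_percentage(min_events, n_train)

print(f"minimal events per leaf: {min_events:g}")
print(f"as a fraction of the training sample: {min_node_size:.3f}%")
```

With these inputs the second term of the `max` is far below 20, so the floor of 20 events applies. That corresponds to 0.625% of the training sample, inside the 0.5% to 10% range the help text describes as typical.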

BDTG : #events: (reweighted) sig: 1600 bkg: 1600

: #events: (unweighted) sig: 1600 bkg: 1600

: Training 100 Decision Trees ... patience please

: Elapsed time for training with 3200 events: 1.64 sec

BDTG : [dataset] : Evaluation of BDTG on training sample (3200 events)

: Elapsed time for evaluation of 3200 events: 0.0179 sec

: Creating xml weight file: dataset/weights/TMVAClassification_BDTG.weights.xml

: Creating standalone class: dataset/weights/TMVAClassification_BDTG.class.C

: data_RNN_CPU.root:/dataset/Method_BDT/BDTG

Factory : Training finished

:

: Ranking input variables (method specific)...

: No variable ranking supplied by classifier: TMVA_LSTM

: No variable ranking supplied by classifier: TMVA_DNN

: No variable ranking supplied by classifier: PyKeras_LSTM

BDTG : Ranking result (top variable is best ranked)

: --------------------------------------------

: Rank : Variable : Variable Importance

: --------------------------------------------

: 1 : vars_time6 : 2.170e-02

: 2 : vars_time8 : 2.105e-02

: 3 : vars_time7 : 2.066e-02

: 4 : vars_time7 : 2.059e-02

: 5 : vars_time8 : 2.037e-02

: 6 : vars_time8 : 1.868e-02

: 7 : vars_time7 : 1.821e-02

: 8 : vars_time7 : 1.765e-02

: 9 : vars_time8 : 1.758e-02

: 10 : vars_time9 : 1.744e-02

: 11 : vars_time0 : 1.687e-02

: 12 : vars_time7 : 1.679e-02

: 13 : vars_time9 : 1.665e-02

: 14 : vars_time8 : 1.636e-02

: 15 : vars_time9 : 1.630e-02

: 16 : vars_time9 : 1.625e-02

: 17 : vars_time6 : 1.531e-02

: 18 : vars_time8 : 1.504e-02

: 19 : vars_time8 : 1.489e-02

: 20 : vars_time7 : 1.434e-02

: 21 : vars_time5 : 1.381e-02

: 22 : vars_time8 : 1.352e-02

: 23 : vars_time8 : 1.346e-02

: 24 : vars_time6 : 1.311e-02

: 25 : vars_time9 : 1.290e-02

: 26 : vars_time9 : 1.225e-02

: 27 : vars_time0 : 1.224e-02

: 28 : vars_time7 : 1.205e-02

: 29 : vars_time4 : 1.199e-02

: 30 : vars_time5 : 1.193e-02

: 31 : vars_time9 : 1.175e-02

: 32 : vars_time9 : 1.171e-02

: 33 : vars_time5 : 1.139e-02

: 34 : vars_time6 : 1.126e-02

: 35 : vars_time6 : 1.119e-02

: 36 : vars_time5 : 1.114e-02

: 37 : vars_time0 : 1.084e-02

: 38 : vars_time5 : 1.044e-02

: 39 : vars_time8 : 1.018e-02

: 40 : vars_time7 : 9.927e-03

: 41 : vars_time6 : 9.759e-03

: 42 : vars_time6 : 9.755e-03

: 43 : vars_time5 : 9.513e-03

: 44 : vars_time9 : 9.416e-03

: 45 : vars_time0 : 8.988e-03

: 46 : vars_time1 : 8.977e-03

: 47 : vars_time6 : 8.768e-03

: 48 : vars_time7 : 8.760e-03

: 49 : vars_time4 : 8.704e-03

: 50 : vars_time7 : 8.605e-03

: 51 : vars_time9 : 8.554e-03

: 52 : vars_time7 : 8.468e-03

: 53 : vars_time5 : 8.338e-03

: 54 : vars_time9 : 7.890e-03

: 55 : vars_time0 : 7.886e-03

: 56 : vars_time8 : 7.725e-03

: 57 : vars_time9 : 7.667e-03

: 58 : vars_time8 : 7.553e-03

: 59 : vars_time0 : 7.398e-03

: 60 : vars_time9 : 7.212e-03

: 61 : vars_time1 : 7.197e-03

: 62 : vars_time7 : 6.883e-03

: 63 : vars_time6 : 6.815e-03

: 64 : vars_time8 : 6.748e-03

: 65 : vars_time7 : 6.706e-03

: 66 : vars_time1 : 6.696e-03

: 67 : vars_time5 : 6.490e-03

: 68 : vars_time0 : 6.484e-03

: 69 : vars_time5 : 6.466e-03

: 70 : vars_time0 : 6.433e-03

: 71 : vars_time8 : 6.416e-03

: 72 : vars_time0 : 6.403e-03

: 73 : vars_time4 : 6.252e-03

: 74 : vars_time2 : 6.211e-03

: 75 : vars_time6 : 6.208e-03

: 76 : vars_time3 : 5.830e-03

: 77 : vars_time7 : 5.812e-03

: 78 : vars_time9 : 5.769e-03

: 79 : vars_time7 : 5.655e-03

: 80 : vars_time4 : 5.638e-03

: 81 : vars_time1 : 5.569e-03

: 82 : vars_time6 : 5.488e-03

: 83 : vars_time2 : 5.483e-03

: 84 : vars_time4 : 5.474e-03

: 85 : vars_time1 : 5.363e-03

: 86 : vars_time1 : 5.342e-03

: 87 : vars_time3 : 5.206e-03

: 88 : vars_time8 : 5.199e-03

: 89 : vars_time3 : 5.106e-03

: 90 : vars_time5 : 5.035e-03

: 91 : vars_time9 : 4.980e-03

: 92 : vars_time8 : 4.916e-03

: 93 : vars_time3 : 4.771e-03

: 94 : vars_time8 : 4.695e-03

: 95 : vars_time2 : 4.643e-03

: 96 : vars_time4 : 4.628e-03

: 97 : vars_time8 : 4.565e-03

: 98 : vars_time2 : 4.498e-03

: 99 : vars_time3 : 4.108e-03

: 100 : vars_time4 : 3.909e-03

: 101 : vars_time8 : 3.842e-03

: 102 : vars_time7 : 3.552e-03

: 103 : vars_time0 : 3.412e-03

: 104 : vars_time6 : 3.325e-03

: 105 : vars_time0 : 0.000e+00

: 106 : vars_time0 : 0.000e+00

: 107 : vars_time0 : 0.000e+00

: 108 : vars_time0 : 0.000e+00

: 109 : vars_time0 : 0.000e+00

: 110 : vars_time0 : 0.000e+00

: 111 : vars_time0 : 0.000e+00

: 112 : vars_time0 : 0.000e+00

: 113 : vars_time0 : 0.000e+00

: 114 : vars_time0 : 0.000e+00

: 115 : vars_time0 : 0.000e+00

: 116 : vars_time0 : 0.000e+00

: 117 : vars_time0 : 0.000e+00

: 118 : vars_time0 : 0.000e+00

: 119 : vars_time0 : 0.000e+00

: 120 : vars_time0 : 0.000e+00

: 121 : vars_time0 : 0.000e+00

: 122 : vars_time0 : 0.000e+00

: 123 : vars_time0 : 0.000e+00

: 124 : vars_time0 : 0.000e+00

: 125 : vars_time1 : 0.000e+00

: 126 : vars_time1 : 0.000e+00

: 127 : vars_time1 : 0.000e+00

: 128 : vars_time1 : 0.000e+00

: 129 : vars_time1 : 0.000e+00

: 130 : vars_time1 : 0.000e+00

: 131 : vars_time1 : 0.000e+00

: 132 : vars_time1 : 0.000e+00

: 133 : vars_time1 : 0.000e+00

: 134 : vars_time1 : 0.000e+00

: 135 : vars_time1 : 0.000e+00

: 136 : vars_time1 : 0.000e+00

: 137 : vars_time1 : 0.000e+00

: 138 : vars_time1 : 0.000e+00

: 139 : vars_time1 : 0.000e+00

: 140 : vars_time1 : 0.000e+00

: 141 : vars_time1 : 0.000e+00

: 142 : vars_time1 : 0.000e+00

: 143 : vars_time1 : 0.000e+00

: 144 : vars_time1 : 0.000e+00

: 145 : vars_time1 : 0.000e+00

: 146 : vars_time1 : 0.000e+00

: 147 : vars_time1 : 0.000e+00

: 148 : vars_time1 : 0.000e+00

: 149 : vars_time2 : 0.000e+00

: 150 : vars_time2 : 0.000e+00

: 151 : vars_time2 : 0.000e+00

: 152 : vars_time2 : 0.000e+00

: 153 : vars_time2 : 0.000e+00

: 154 : vars_time2 : 0.000e+00

: 155 : vars_time2 : 0.000e+00

: 156 : vars_time2 : 0.000e+00

: 157 : vars_time2 : 0.000e+00

: 158 : vars_time2 : 0.000e+00

: 159 : vars_time2 : 0.000e+00

: 160 : vars_time2 : 0.000e+00

: 161 : vars_time2 : 0.000e+00

: 162 : vars_time2 : 0.000e+00

: 163 : vars_time2 : 0.000e+00

: 164 : vars_time2 : 0.000e+00

: 165 : vars_time2 : 0.000e+00

: 166 : vars_time2 : 0.000e+00

: 167 : vars_time2 : 0.000e+00

: 168 : vars_time2 : 0.000e+00

: 169 : vars_time2 : 0.000e+00

: 170 : vars_time2 : 0.000e+00

: 171 : vars_time2 : 0.000e+00

: 172 : vars_time2 : 0.000e+00

: 173 : vars_time2 : 0.000e+00

: 174 : vars_time2 : 0.000e+00

: 175 : vars_time3 : 0.000e+00

: 176 : vars_time3 : 0.000e+00

: 177 : vars_time3 : 0.000e+00

: 178 : vars_time3 : 0.000e+00

: 179 : vars_time3 : 0.000e+00

: 180 : vars_time3 : 0.000e+00

: 181 : vars_time3 : 0.000e+00

: 182 : vars_time3 : 0.000e+00

: 183 : vars_time3 : 0.000e+00

: 184 : vars_time3 : 0.000e+00

: 185 : vars_time3 : 0.000e+00

: 186 : vars_time3 : 0.000e+00

: 187 : vars_time3 : 0.000e+00

: 188 : vars_time3 : 0.000e+00

: 189 : vars_time3 : 0.000e+00

: 190 : vars_time3 : 0.000e+00

: 191 : vars_time3 : 0.000e+00

: 192 : vars_time3 : 0.000e+00

: 193 : vars_time3 : 0.000e+00

: 194 : vars_time3 : 0.000e+00

: 195 : vars_time3 : 0.000e+00

: 196 : vars_time3 : 0.000e+00

: 197 : vars_time3 : 0.000e+00

: 198 : vars_time3 : 0.000e+00

: 199 : vars_time3 : 0.000e+00

: 200 : vars_time4 : 0.000e+00

: 201 : vars_time4 : 0.000e+00

: 202 : vars_time4 : 0.000e+00

: 203 : vars_time4 : 0.000e+00

: 204 : vars_time4 : 0.000e+00

: 205 : vars_time4 : 0.000e+00

: 206 : vars_time4 : 0.000e+00

: 207 : vars_time4 : 0.000e+00

: 208 : vars_time4 : 0.000e+00

: 209 : vars_time4 : 0.000e+00

: 210 : vars_time4 : 0.000e+00

: 211 : vars_time4 : 0.000e+00

: 212 : vars_time4 : 0.000e+00

: 213 : vars_time4 : 0.000e+00

: 214 : vars_time4 : 0.000e+00

: 215 : vars_time4 : 0.000e+00

: 216 : vars_time4 : 0.000e+00

: 217 : vars_time4 : 0.000e+00

: 218 : vars_time4 : 0.000e+00

: 219 : vars_time4 : 0.000e+00

: 220 : vars_time4 : 0.000e+00

: 221 : vars_time4 : 0.000e+00

: 222 : vars_time4 : 0.000e+00

: 223 : vars_time5 : 0.000e+00

: 224 : vars_time5 : 0.000e+00

: 225 : vars_time5 : 0.000e+00

: 226 : vars_time5 : 0.000e+00

: 227 : vars_time5 : 0.000e+00

: 228 : vars_time5 : 0.000e+00

: 229 : vars_time5 : 0.000e+00

: 230 : vars_time5 : 0.000e+00

: 231 : vars_time5 : 0.000e+00

: 232 : vars_time5 : 0.000e+00

: 233 : vars_time5 : 0.000e+00

: 234 : vars_time5 : 0.000e+00

: 235 : vars_time5 : 0.000e+00

: 236 : vars_time5 : 0.000e+00

: 237 : vars_time5 : 0.000e+00

: 238 : vars_time5 : 0.000e+00

: 239 : vars_time5 : 0.000e+00

: 240 : vars_time5 : 0.000e+00

: 241 : vars_time5 : 0.000e+00

: 242 : vars_time5 : 0.000e+00

: 243 : vars_time6 : 0.000e+00

: 244 : vars_time6 : 0.000e+00

: 245 : vars_time6 : 0.000e+00

: 246 : vars_time6 : 0.000e+00

: 247 : vars_time6 : 0.000e+00

: 248 : vars_time6 : 0.000e+00

: 249 : vars_time6 : 0.000e+00

: 250 : vars_time6 : 0.000e+00

: 251 : vars_time6 : 0.000e+00

: 252 : vars_time6 : 0.000e+00

: 253 : vars_time6 : 0.000e+00

: 254 : vars_time6 : 0.000e+00

: 255 : vars_time6 : 0.000e+00

: 256 : vars_time6 : 0.000e+00

: 257 : vars_time6 : 0.000e+00

: 258 : vars_time6 : 0.000e+00

: 259 : vars_time6 : 0.000e+00

: 260 : vars_time6 : 0.000e+00

: 261 : vars_time7 : 0.000e+00

: 262 : vars_time7 : 0.000e+00

: 263 : vars_time7 : 0.000e+00

: 264 : vars_time7 : 0.000e+00

: 265 : vars_time7 : 0.000e+00

: 266 : vars_time7 : 0.000e+00

: 267 : vars_time7 : 0.000e+00

: 268 : vars_time7 : 0.000e+00

: 269 : vars_time7 : 0.000e+00

: 270 : vars_time7 : 0.000e+00

: 271 : vars_time7 : 0.000e+00

: 272 : vars_time7 : 0.000e+00

: 273 : vars_time7 : 0.000e+00

: 274 : vars_time7 : 0.000e+00

: 275 : vars_time8 : 0.000e+00

: 276 : vars_time8 : 0.000e+00

: 277 : vars_time8 : 0.000e+00

: 278 : vars_time8 : 0.000e+00

: 279 : vars_time8 : 0.000e+00

: 280 : vars_time8 : 0.000e+00

: 281 : vars_time8 : 0.000e+00

: 282 : vars_time8 : 0.000e+00

: 283 : vars_time8 : 0.000e+00

: 284 : vars_time8 : 0.000e+00

: 285 : vars_time8 : 0.000e+00

: 286 : vars_time9 : 0.000e+00

: 287 : vars_time9 : 0.000e+00

: 288 : vars_time9 : 0.000e+00

: 289 : vars_time9 : 0.000e+00

: 290 : vars_time9 : 0.000e+00

: 291 : vars_time9 : 0.000e+00

: 292 : vars_time9 : 0.000e+00

: 293 : vars_time9 : 0.000e+00

: 294 : vars_time9 : 0.000e+00

: 295 : vars_time9 : 0.000e+00

: 296 : vars_time9 : 0.000e+00

: 297 : vars_time9 : 0.000e+00

: 298 : vars_time9 : 0.000e+00

: 299 : vars_time9 : 0.000e+00

: 300 : vars_time9 : 0.000e+00

: --------------------------------------------

TH1.Print Name = TrainingHistory_TMVA_LSTM_trainingError, Entries= 0, Total sum= 6.42282

TH1.Print Name = TrainingHistory_TMVA_LSTM_valError, Entries= 0, Total sum= 6.63799

TH1.Print Name = TrainingHistory_TMVA_DNN_trainingError, Entries= 0, Total sum= 13.2619

TH1.Print Name = TrainingHistory_TMVA_DNN_valError, Entries= 0, Total sum= 13.9489

TH1.Print Name = TrainingHistory_PyKeras_LSTM_'accuracy', Entries= 0, Total sum= 6.36523

TH1.Print Name = TrainingHistory_PyKeras_LSTM_'loss', Entries= 0, Total sum= 6.22752

TH1.Print Name = TrainingHistory_PyKeras_LSTM_'val_accuracy', Entries= 0, Total sum= 6.33125

TH1.Print Name = TrainingHistory_PyKeras_LSTM_'val_loss', Entries= 0, Total sum= 6.27928

Factory : === Destroy and recreate all methods via weight files for testing ===

:

: Reading weight file: dataset/weights/TMVAClassification_TMVA_LSTM.weights.xml

: Reading weight file: dataset/weights/TMVAClassification_TMVA_DNN.weights.xml

: Reading weight file: dataset/weights/TMVAClassification_PyKeras_LSTM.weights.xml

: Reading weight file: dataset/weights/TMVAClassification_BDTG.weights.xml

Factory : Test all methods

Factory : Test method: TMVA_LSTM for Classification performance

:

: Evaluate deep neural network on CPU using batches with size = 800

:

TMVA_LSTM : [dataset] : Evaluation of TMVA_LSTM on testing sample (800 events)

: Elapsed time for evaluation of 800 events: 0.0526 sec

Factory : Test method: TMVA_DNN for Classification performance

:

: Evaluate deep neural network on CPU using batches with size = 800

:

TMVA_DNN : [dataset] : Evaluation of TMVA_DNN on testing sample (800 events)

: Elapsed time for evaluation of 800 events: 0.0218 sec

Factory : Test method: PyKeras_LSTM for Classification performance

:

: Setting up tf.keras

: Using TensorFlow version 2

: Use Keras version from TensorFlow : tf.keras

: Applying GPU option: gpu_options.allow_growth=True

: Disabled TF eager execution when evaluating model

: Loading Keras Model

: Loaded model from file: trained_model_LSTM.h5

PyKeras_LSTM : [dataset] : Evaluation of PyKeras_LSTM on testing sample (800 events)

: Elapsed time for evaluation of 800 events: 0.254 sec

Factory : Test method: BDTG for Classification performance

:

BDTG : [dataset] : Evaluation of BDTG on testing sample (800 events)

: Elapsed time for evaluation of 800 events: 0.00678 sec

Factory : Evaluate all methods

Factory : Evaluate classifier: TMVA_LSTM

:

TMVA_LSTM : [dataset] : Loop over test events and fill histograms with classifier response...

:

: Evaluate deep neural network on CPU using batches with size = 1000

:

: Dataset[dataset] : variable plots are not produces ! The number of variables is 300 , it is larger than 200

Factory : Evaluate classifier: TMVA_DNN

:

TMVA_DNN : [dataset] : Loop over test events and fill histograms with classifier response...

:

: Evaluate deep neural network on CPU using batches with size = 1000

:

: Dataset[dataset] : variable plots are not produces ! The number of variables is 300 , it is larger than 200

Factory : Evaluate classifier: PyKeras_LSTM

:

PyKeras_LSTM : [dataset] : Loop over test events and fill histograms with classifier response...

:

: Dataset[dataset] : variable plots are not produces ! The number of variables is 300 , it is larger than 200

Factory : Evaluate classifier: BDTG

:

BDTG : [dataset] : Loop over test events and fill histograms with classifier response...

:

: Dataset[dataset] : variable plots are not produces ! The number of variables is 300 , it is larger than 200

:

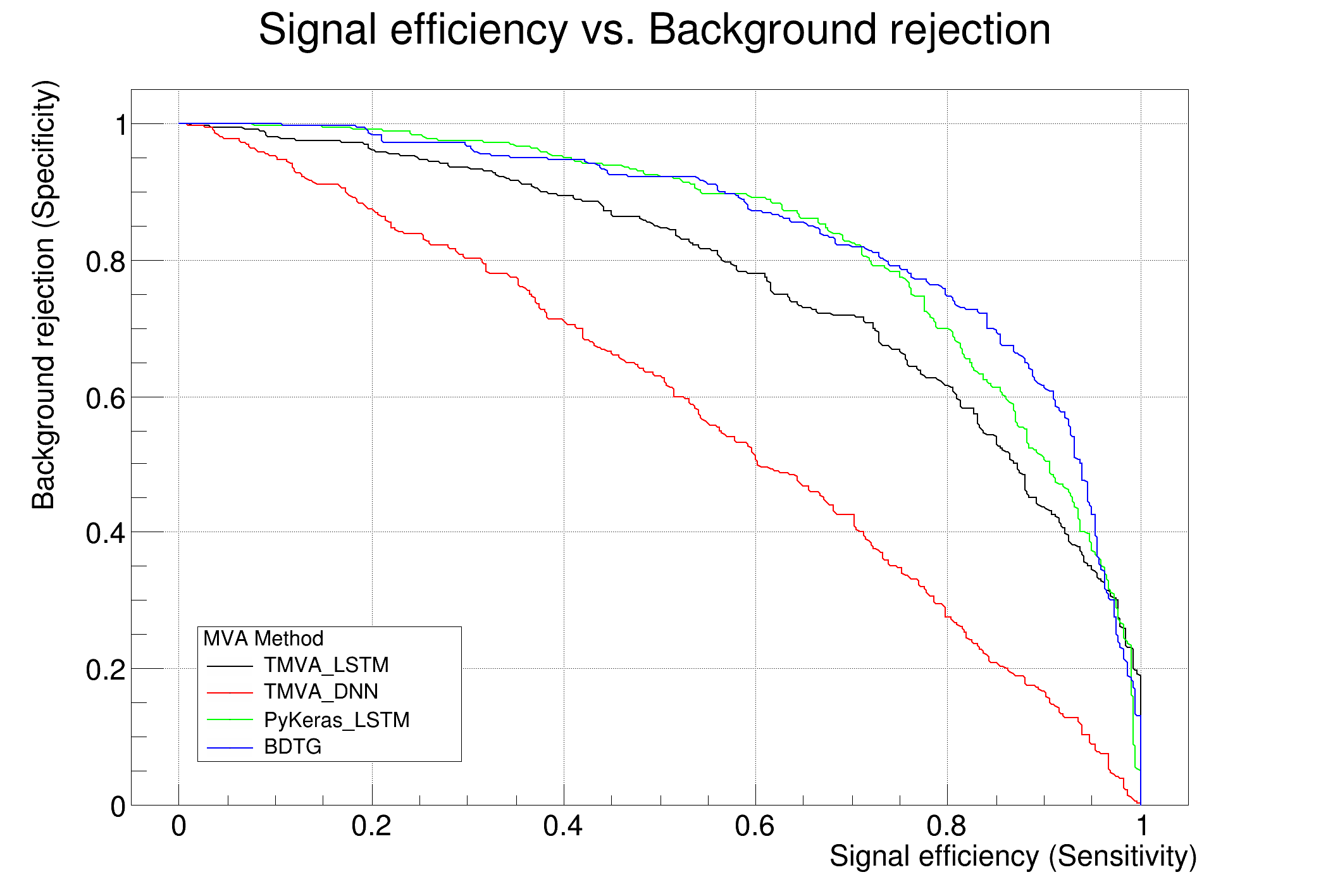

: Evaluation results ranked by best signal efficiency and purity (area)

: -------------------------------------------------------------------------------------------------------------------

: DataSet MVA

: Name: Method: ROC-integ

: dataset BDTG : 0.849

: dataset PyKeras_LSTM : 0.787

: dataset TMVA_LSTM : 0.778

: dataset TMVA_DNN : 0.660

: -------------------------------------------------------------------------------------------------------------------
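For readers unfamiliar with the "ROC-integ" column, here is a minimal Python sketch of one common way to compute an area under the ROC curve: the probability that a randomly chosen signal event receives a higher classifier score than a randomly chosen background event. It assumes the column is the usual ROC area; it is not TMVA's implementation, the function name is hypothetical, and the scores are toy values invented for illustration, not taken from this run.

```python
def roc_integral(signal_scores, background_scores):
    """Area under the ROC curve via pairwise comparison.

    Counts the fraction of (signal, background) pairs in which the signal
    event scores higher; ties count as half a win.
    """
    wins = 0.0
    for s in signal_scores:
        for b in background_scores:
            if s > b:
                wins += 1.0
            elif s == b:
                wins += 0.5
    return wins / (len(signal_scores) * len(background_scores))


# Toy classifier outputs, invented for illustration only.
toy_signal = [0.9, 0.8, 0.6, 0.4]
toy_background = [0.7, 0.3, 0.2, 0.1]

auc = roc_integral(toy_signal, toy_background)
print(f"toy ROC integral: {auc:.3f}")
```

A value of 0.5 corresponds to random guessing and 1.0 to perfect separation, which gives a scale for reading the 0.660 to 0.849 range in the table.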

:

: Testing efficiency compared to training efficiency (overtraining check)

: -------------------------------------------------------------------------------------------------------------------

: DataSet MVA Signal efficiency: from test sample (from training sample)

: Name: Method: @B=0.01 @B=0.10 @B=0.30

: -------------------------------------------------------------------------------------------------------------------

: dataset BDTG : 0.190 (0.315) 0.565 (0.663) 0.844 (0.883)

: dataset PyKeras_LSTM : 0.062 (0.108) 0.455 (0.497) 0.727 (0.758)

: dataset TMVA_LSTM : 0.075 (0.085) 0.378 (0.422) 0.723 (0.723)

: dataset TMVA_DNN : 0.045 (0.033) 0.245 (0.202) 0.503 (0.518)

: -------------------------------------------------------------------------------------------------------------------
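The overtraining table can be summarised by the difference between training and test signal efficiency at each background working point. The Python sketch below copies the numbers from the table above and reports those raw gaps. The function and variable names are hypothetical, and no statistical errors are available in the log, so the gaps indicate where to look rather than prove overtraining.

```python
# (test, training) signal efficiencies copied from the table above.
efficiencies = {
    "BDTG":         {"B=0.01": (0.190, 0.315), "B=0.10": (0.565, 0.663), "B=0.30": (0.844, 0.883)},
    "PyKeras_LSTM": {"B=0.01": (0.062, 0.108), "B=0.10": (0.455, 0.497), "B=0.30": (0.727, 0.758)},
    "TMVA_LSTM":    {"B=0.01": (0.075, 0.085), "B=0.10": (0.378, 0.422), "B=0.30": (0.723, 0.723)},
    "TMVA_DNN":     {"B=0.01": (0.045, 0.033), "B=0.10": (0.245, 0.202), "B=0.30": (0.503, 0.518)},
}


def train_test_gaps(table):
    """Return training minus test efficiency per method and working point."""
    return {
        method: {wp: round(train - test, 3) for wp, (test, train) in points.items()}
        for method, points in table.items()
    }


gaps = train_test_gaps(efficiencies)
for method, per_point in gaps.items():
    formatted = ", ".join(f"{wp}: {gap:+.3f}" for wp, gap in per_point.items())
    print(f"{method:13s} {formatted}")

# Method with the largest single training-minus-test gap.
worst = max(gaps, key=lambda m: max(gaps[m].values()))
print(f"largest gap: {worst}")
```

A positive gap means the method performs better on the training sample than on the independent test sample. In this table the largest gap belongs to BDTG at B=0.01, while TMVA_DNN shows small negative gaps at two working points.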

:

Dataset:dataset : Created tree 'TestTree' with 800 events

:

Dataset:dataset : Created tree 'TrainTree' with 3200 events

:

Factory : Thank you for using TMVA!

: For citation information, please visit: http://tmva.sf.net/citeTMVA.html

nthreads = 4