Configure a Spark connection and fill two histograms in a distributed fashion.

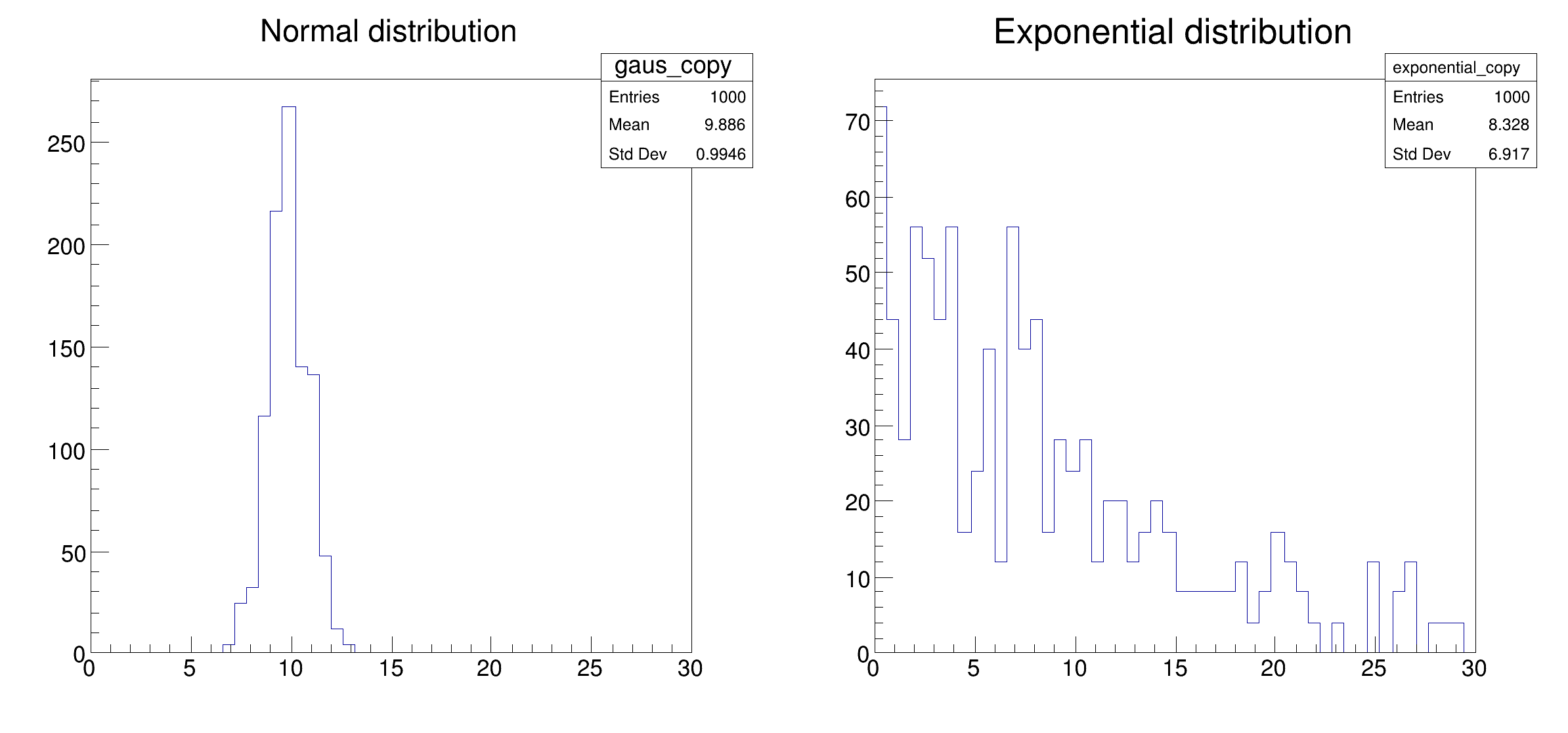

This tutorial shows the ingredients needed to set up the connection to a Spark cluster: a SparkConf object holding the configuration parameters and a SparkContext object created with the desired options. After this initial setup, an RDataFrame with distributed capabilities is created and connected to the SparkContext instance. Finally, two histograms are filled from the columns defined on the dataset and drawn.
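
As a minimal sketch of the configuration step, the options can be collected as key-value pairs before being handed to Spark. The helper name `spark_options` and its `n_cores` parameter are hypothetical and not part of the tutorial; the sketch uses only the standard library so that it runs without a Spark installation.

```python
def spark_options(app_name, n_cores=None):
    """Return Spark options as a list of (key, value) pairs.

    Hypothetical helper for illustration only.
    n_cores=None selects "local[*]", i.e. all available local cores.
    """
    if n_cores is not None and n_cores < 1:
        raise ValueError("n_cores must be a positive integer")
    master = "local[*]" if n_cores is None else f"local[{n_cores}]"
    options = {"spark.app.name": app_name, "spark.master": master}
    return list(options.items())


# Same settings as in this tutorial: a local Spark master with 4 cores
pairs = spark_options("distrdf001_spark_connection", n_cores=4)
print(pairs)
```

With pyspark installed, such a list of pairs can be passed to `pyspark.SparkConf().setAll(pairs)`, which is the call this tutorial uses with the items of a dictionary.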

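    import pyspark
    import ROOT

    # Point RDataFrame calls to the Spark-backed distributed RDataFrame
    RDataFrame = ROOT.RDF.Experimental.Distributed.Spark.RDataFrame

    # Spark configuration: the application name and the master endpoint.
    # "local[4]" runs Spark locally on this machine with 4 cores.
    sparkconf = pyspark.SparkConf().setAll(
        {"spark.app.name": "distrdf001_spark_connection",
         "spark.master": "local[4]", }.items())
    # Create the SparkContext from the configuration above
    sparkcontext = pyspark.SparkContext(conf=sparkconf)

    # Create an RDataFrame with 1000 entries, connected to the SparkContext
    df = RDataFrame(1000, sparkcontext=sparkcontext)

    # Set the random seed and define two columns of random numbers
    ROOT.gRandom.SetSeed(1)
    df_1 = df.Define("gaus", "gRandom->Gaus(10, 1)").Define("exponential", "gRandom->Exp(10)")

    # Book one histogram per column
    h_gaus = df_1.Histo1D(("gaus", "Normal distribution", 50, 0, 30), "gaus")
    h_exp = df_1.Histo1D(("exponential", "Exponential distribution", 50, 0, 30), "exponential")

    # Draw the histograms side by side on one canvas
    c = ROOT.TCanvas("distrdf001", "distrdf001", 800, 400)
    c.Divide(2, 1)
    c.cd(1)
    h_gaus.DrawCopy()
    c.cd(2)
    h_exp.DrawCopy()

    # Save the canvas
    c.SaveAs("distrdf001_spark_connection.png")
    print("Saved figure to distrdf001_spark_connection.png")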
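
To give an intuition for what filling a histogram in a distributed fashion involves, the following is a simplified, standard-library-only sketch. It is not the actual RDataFrame or Spark implementation. It assumes the entries are split into contiguous ranges, each range fills a partial histogram (map step), and the partial histograms are added bin by bin (reduce step). The function names, the per-range seeds and the use of Python's `random` module are illustrative assumptions.

```python
import random

# Same binning as the histograms in this tutorial: 50 bins on [0, 30)
N_BINS, LOW, HIGH = 50, 0.0, 30.0


def split_ranges(n_entries, n_parts):
    """Split [0, n_entries) into n_parts contiguous (start, end) ranges."""
    base, extra = divmod(n_entries, n_parts)
    ranges, start = [], 0
    for i in range(n_parts):
        end = start + base + (1 if i < extra else 0)
        ranges.append((start, end))
        start = end
    return ranges


def fill_partial(entry_range, seed):
    """Map step: fill a partial histogram for one range of entries."""
    rng = random.Random(seed)  # illustrative: one generator per range
    counts = [0] * N_BINS
    start, end = entry_range
    for _ in range(start, end):
        x = rng.gauss(10, 1)  # mean 10, sigma 1, as in the "gaus" column
        if LOW <= x < HIGH:
            index = int((x - LOW) / (HIGH - LOW) * N_BINS)
            counts[min(index, N_BINS - 1)] += 1
    return counts


def merge(a, b):
    """Reduce step: add two partial histograms bin by bin."""
    return [x + y for x, y in zip(a, b)]


ranges = split_ranges(1000, 4)
partials = [fill_partial(r, seed=i) for i, r in enumerate(ranges)]
total = partials[0]
for partial in partials[1:]:
    total = merge(total, partial)
print(ranges)
```

Because histogram bin contents are plain sums, the merged result does not depend on the order in which the partial histograms are combined, which is what makes this kind of operation easy to distribute.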

- Date: March 2021

- Author: Vincenzo Eduardo Padulano

Definition in file distrdf001_spark_connection.py.
