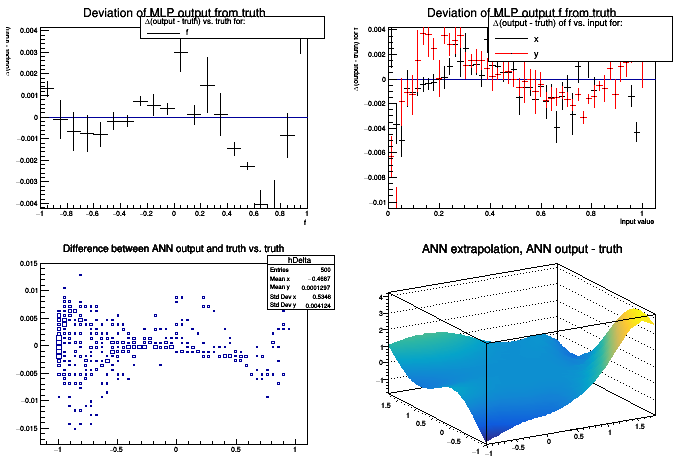

This macro shows how to use an artificial neural network (ANN) for regression analysis: given a set {i} of input vectors i and a set {o} of corresponding output vectors o, one looks for the unknown function f with f(i)=o.
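One common way to make "looking for the unknown function" precise is a least-squares fit over the N training pairs. The formulation below is a sketch under that assumption; the tutorial text itself does not state which error measure the network minimizes, and the symbols g, N and k are introduced here for illustration only.

$$
\hat{f} \;=\; \arg\min_{g} \; \sum_{k=1}^{N} \left\lVert g(i_k) - o_k \right\rVert^{2}
$$

Here g ranges over the functions the network can represent, and (i_k, o_k) is the k-th input/output pair of the training set.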

The ANN can approximate this function; the TMLPAnalyzer::DrawTruthDeviation family of methods can be used to evaluate the quality of the approximation.
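The idea behind a truth-deviation plot can be sketched in plain C++ without ROOT: group the events by their true value and average the difference between network output and truth in each group. The names `ProfileBin` and `truthDeviationProfile` are hypothetical helpers for this sketch and are not part of TMLPAnalyzer; how TMLPAnalyzer builds its plots internally may differ.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Hypothetical helper type: accumulated deviation of the events in one truth bin.
struct ProfileBin {
    double sumDeviation = 0.0;
    std::size_t count = 0;
    // Mean of (output - truth) in this bin; 0 for an empty bin.
    double mean() const { return count ? sumDeviation / count : 0.0; }
};

// Bins events by their true value in [lo, hi) and accumulates (output - truth).
std::vector<ProfileBin> truthDeviationProfile(const std::vector<double>& output,
                                              const std::vector<double>& truth,
                                              std::size_t nBins, double lo, double hi) {
    assert(output.size() == truth.size() && nBins > 0 && hi > lo);
    std::vector<ProfileBin> bins(nBins);
    for (std::size_t i = 0; i < truth.size(); ++i) {
        if (truth[i] < lo || truth[i] >= hi) continue;  // skip out-of-range events
        std::size_t b = static_cast<std::size_t>((truth[i] - lo) / (hi - lo) * nBins);
        if (b >= nBins) b = nBins - 1;  // guard against rounding at the upper edge
        bins[b].sumDeviation += output[i] - truth[i];
        ++bins[b].count;
    }
    return bins;
}
```

A well-trained network should give bin means close to zero over the whole range of true values; a systematic trend in the means points to a region where the approximation is biased.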

For simplicity, we use a known function to create test and training data. In reality, this function is usually not known, and the data come, e.g., from measurements.
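As a rough illustration of this setup in plain C++ (no ROOT), the sketch below samples the known function at random points and splits the entries into a training and a test set. It assumes that the inputs are drawn uniformly from [0, 1) and that the macro's two selection strings, `"Entry$%2"` and `"(Entry$%2)==0"`, are the training and test selections in that order. `Sample`, `makeSamples`, `splitOddEven` and the seed are hypothetical names and values chosen for this example.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <random>
#include <vector>

// The "unknown" function from the macro, written in plain C++.
double theUnknownFunction(double x, double y) {
    return std::sin((1.7 + x) * (x - 0.3) - 2.3 * (y + 0.7));
}

struct Sample { double x, y, f; };

// Draws n points (x, y) from the unit square and records f(x, y).
// The sampling range and the seed are assumptions of this sketch.
std::vector<Sample> makeSamples(std::size_t n, unsigned seed = 42) {
    std::mt19937 gen(seed);
    std::uniform_real_distribution<double> uni(0.0, 1.0);
    std::vector<Sample> samples;
    samples.reserve(n);
    for (std::size_t i = 0; i < n; ++i) {
        const double x = uni(gen);
        const double y = uni(gen);
        samples.push_back({x, y, theUnknownFunction(x, y)});
    }
    return samples;
}

// Odd entries go to the training set, even entries to the test set.
void splitOddEven(const std::vector<Sample>& all,
                  std::vector<Sample>& train, std::vector<Sample>& test) {
    assert(train.empty() && test.empty());
    for (std::size_t i = 0; i < all.size(); ++i) {
        (i % 2 ? train : test).push_back(all[i]);
    }
}
```

Splitting by entry parity gives two sets of equal size drawn from the same distribution, so the test set can show whether the network generalizes or merely memorizes the training points.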

Processing /mnt/build/workspace/root-makedoc-v612/rootspi/rdoc/src/v6-12-00-patches/tutorials/mlp/mlpRegression.C...

Network with structure: x,y:10:8:f

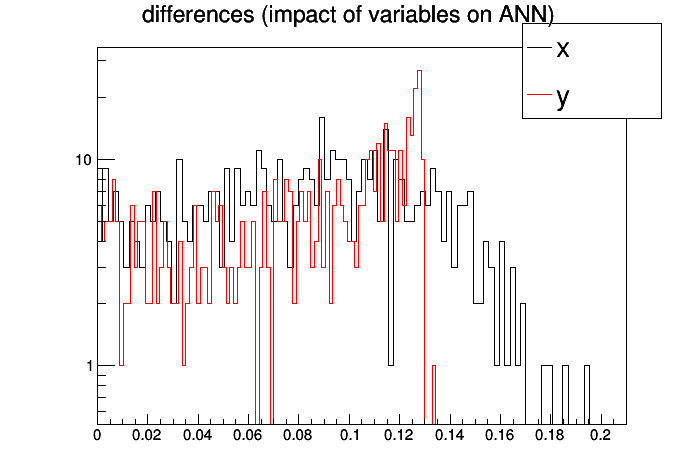

inputs with low values in the differences plot may not be needed

x -> 0.0833304 +/- 0.0439786

y -> 0.0816078 +/- 0.0398608
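The two lines of the form `x -> value +/- spread` summarize how strongly the network output reacts to each input. A minimal sketch of such a sensitivity measure in plain C++ follows; it assumes that each input is shifted by a fixed amount (0.1 here) and that the absolute change of the output is averaged over all events. This is only an illustration of the idea: the exact procedure inside TMLPAnalyzer may differ, and `Sensitivity`, `inputSensitivity` and the default shift are hypothetical.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <functional>
#include <vector>

struct Sensitivity { double mean; double spread; };

// For input `index`, shifts that input by `shift` in every event and
// summarizes |model(shifted) - model(original)| by its mean and standard deviation.
Sensitivity inputSensitivity(const std::function<double(const std::vector<double>&)>& model,
                             const std::vector<std::vector<double>>& events,
                             std::size_t index, double shift = 0.1) {
    assert(!events.empty());
    double sum = 0.0, sumSq = 0.0;
    for (std::vector<double> shifted : events) {  // each event is copied on purpose
        assert(index < shifted.size());
        const double before = model(shifted);
        shifted[index] += shift;
        const double diff = std::fabs(model(shifted) - before);
        sum += diff;
        sumSq += diff * diff;
    }
    const double n = static_cast<double>(events.size());
    const double mean = sum / n;
    const double variance = sumSq / n - mean * mean;
    return {mean, std::sqrt(variance > 0.0 ? variance : 0.0)};
}
```

An input whose mean difference is close to zero barely influences the output and is a candidate for removal, which is what the note about "low values in the differences plot" refers to. In the output above, x and y have similar values, so both inputs matter.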

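    #include <cmath>
    #include "TCanvas.h"
    #include "TDirectory.h"
    #include "TGraph2D.h"
    #include "TH2.h"
    #include "TMLPAnalyzer.h"
    #include "TMultiLayerPerceptron.h"
    #include "TNtuple.h"
    #include "TRandom.h"
    #include "TTree.h"

    // Stand-in for the function the network has to learn.
    Double_t theUnknownFunction(Double_t x, Double_t y) {
       return sin((1.7+x)*(x-0.3)-2.3*(y+0.7));
    }

    void mlpRegression() {
       // Tree with two input parameters, x and y, and one output value, f(x,y).
       TNtuple* t=new TNtuple("tree","tree","x:y:f");
       TRandom r;
       for (Int_t i=0; i<1000; i++) {
          Float_t x=r.Rndm();
          Float_t y=r.Rndm();
          t->Fill(x,y,theUnknownFunction(x,y));
       }

       // Create the ANN; odd entries are used for training, even entries for testing.
       TMultiLayerPerceptron* mlp=new TMultiLayerPerceptron("x,y:10:8:f",t,
          "Entry$%2","(Entry$%2)==0");
       mlp->Train(150,"graph update=10");

       // Analyze the trained network.
       TMLPAnalyzer* mlpa=new TMLPAnalyzer(mlp);
       mlpa->GatherInformations();
       mlpa->CheckNetwork();
       mlpa->DrawDInputs();

       // Plots showing the quality of the ANN's approximation.
       TCanvas* cIO=new TCanvas("TruthDeviation", "TruthDeviation");
       cIO->Divide(2,2);
       cIO->cd(1);
       mlpa->DrawTruthDeviations();
       cIO->cd(2);
       mlpa->DrawTruthDeviationInsOut();

       // Box plot of (ANN output - truth) vs. truth.
       cIO->cd(3);
       mlpa->GetIOTree()->Draw("Out.Out0-True.True0:True.True0>>hDelta","","goff");
       TH2F* hDelta=(TH2F*)gDirectory->Get("hDelta");
       hDelta->SetTitle("Difference between ANN output and truth vs. truth");
       hDelta->Draw("BOX");

       // (ANN output - truth) on a grid that extends beyond the training range.
       cIO->cd(4);
       Double_t vx[225];
       Double_t vy[225];
       Double_t delta[225];
       Double_t v[2];
       for (Int_t ix=0; ix<15; ix++) {
          v[0]=ix/5.-1.;
          for (Int_t iy=0; iy<15; iy++) {
             v[1]=iy/5.-1.;
             Int_t idx=ix*15+iy;
             vx[idx]=v[0];
             vy[idx]=v[1];
             delta[idx]=mlp->Evaluate(0, v)-theUnknownFunction(v[0],v[1]);
          }
       }
       TGraph2D* g2Extrapolate=new TGraph2D("ANN extrapolation",
                                            "ANN extrapolation, ANN output - truth",
                                            225, vx, vy, delta);
       g2Extrapolate->Draw("TRI2");
    }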
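The last part of the macro evaluates the network on a 15 x 15 grid with coordinates `i/5. - 1.`, which runs from -1 to about 1.8 in both x and y. The sketch below rebuilds that grid in plain C++ and flags which points fall inside the region the network was trained on. The training range [0, 1) is an assumption of this sketch, and `GridPoint` and `makeExtrapolationGrid` are hypothetical names.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

struct GridPoint { double x, y; bool insideTrainingRange; };

// Rebuilds the n x n grid of the macro: coordinates i/5 - 1 for i = 0..n-1.
// The training range [0, 1) used for the flag is an assumption of this sketch.
std::vector<GridPoint> makeExtrapolationGrid(int n = 15) {
    assert(n > 0);
    std::vector<GridPoint> grid(static_cast<std::size_t>(n) * n);
    for (int ix = 0; ix < n; ++ix) {
        const double x = ix / 5. - 1.;
        for (int iy = 0; iy < n; ++iy) {
            const double y = iy / 5. - 1.;
            const std::size_t idx = static_cast<std::size_t>(ix) * n + iy;  // row-major index
            const bool inside = x >= 0. && x < 1. && y >= 0. && y < 1.;
            grid[idx] = {x, y, inside};
        }
    }
    return grid;
}
```

Under the stated assumption only 25 of the 225 grid points lie inside the training region, so most of the plotted differences measure extrapolation rather than interpolation. Large deviations away from the unit square are therefore expected and do not by themselves indicate a poorly trained network.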

- Author: Axel Naumann, 2005-02-02

Definition in file mlpRegression.C.