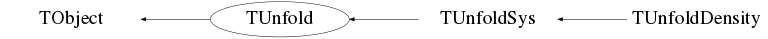

class TUnfold: public TObject

TUnfold is used to decompose a measurement y into several sources x given the measurement uncertainties and a matrix of migrations A * For most applications, it is better to use TUnfoldDensity * * instead of using TUnfoldSys or TUnfold * If you use this software, please consider the following citation S.Schmitt, JINST 7 (2012) T10003 [arXiv:1205.6201] More documentation and updates are available on http://www.desy.de/~sschmitt A short summary of the algorithm is given in the following: the "best" x matching the measurement y within errors is determined by minimizing the function L1+L2+L3 where L1 = (y-Ax)# Vyy^-1 (y-Ax) L2 = tau^2 (L(x-x0))# L(x-x0) L3 = lambda sum_i(y_i -(Ax)_i) [the notation # means that the matrix is transposed ] The term L1 is familiar from a least-square minimisation The term L2 defines the regularisation (smootheness condition on x), where the parameter tau^2 gives the strength of teh regularisation The term L3 is an optional area constraint with Lagrangian parameter lambda, ensuring that that the normalisation of x is consistent with the normalisation of y The method can be applied to a very large number of problems, where the measured distribution y is a linear superposition of several Monte Carlo shapes Input from measurement: y: vector of measured quantities (dimension ny) Vyy: covariance matrix for y (dimension ny x ny) in many cases V is diagonal and calculated from the errors of y From simulation: A: migration matrix (dimension ny x nx) Result x: unknown underlying distribution (dimension nx) The error matrix of x, V_xx, is also determined Regularisation tau: parameter, defining the regularisation strength L: matrix of regularisation conditions (dimension nl x nx) depends on the structure of the input data x0: bias distribution, from simulation Preservation of the area lambda: lagrangian multiplier y_i: one component of the vector y (Ax)_i: one component of the vector Ax Determination of the unfolding result x: (a) not constrained: minimisation is performed as a function of x for fixed lambda=0 or (b) constrained: stationary point is found as a function of x and lambda The constraint can be useful to reduce biases on the result x in cases where the vector y follows non-Gaussian probability densities (example: Poisson statistics at counting experiments in particle physics) Some random examples: (1) measure a cross-section as a function of, say, E_T(detector) and unfold it to obtain the underlying distribution E_T(generator) (2) measure a lifetime distribution and unfold the contributions from different flavours (3) measure the transverse mass and decay angle and unfold for the true mass distribution plus background Documentation Some technical documentation is available here: http://www.desy.de/~sschmitt Note: For most applications it is better to use the derived class TUnfoldSys or even better TUnfoldDensity TUnfoldSys extends the functionality of TUnfold such that systematic errors are propagated to teh result and that the unfolding can be done with proper background subtraction TUnfoldDensity extends further the functionality of TUnfoldSys complex binning schemes are supported The binning of input histograms is handeld consistently: (1) the regularisation may be done by density, i.e respecting the bin widths (2) methods are provided which preserve the proper binning of the result histograms Implementation The result of the unfolding is calculated as follows: Lsquared = L#L regularisation conditions squared epsilon_j = sum_i A_ij vector of efficiencies E^-1 = ((A# Vyy^-1 A)+tau^2 Lsquared) x = E (A# Vyy^-1 y + tau^2 Lsquared x0 +lambda/2 * epsilon) is the result The derivatives dx_k/dy_i dx_k/dA_ij dx_k/d(tau^2) are calculated for further usage. The covariance matrix V_xx is calculated as: Vxx_ij = sum_kl dx_i/dy_k Vyy_kl dx_j/dy_l Warning: The algorithm is based on "standard" matrix inversion, with the known limitations in numerical accuracy and computing cost for matrices with large dimensions. Thus the algorithm should not used for large dimensions of x and y nx should not be much larger than 200 ny should not be much larger than 1000 Proper choice of tau One of the difficult questions is about the choice of tau. The method implemented in TUnfold is the L-curve method: a two-dimensional curve is plotted x-axis: log10(chisquare) y-axis: log10(regularisation condition) In many cases this curve has an L-shape. The best choice of tau is in the kink of the L Within TUnfold a simple version of the L-curve analysis is available. It tests a given number of points in a predefined tau-range and searches for the maximum of the curvature in the L-curve (kink position). if no tau range is given, the range of the scan is determined automatically A nice overview of the L-curve method is given in: The L-curve and Its Use in the Numerical Treatment of Inverse Problems (2000) by P. C. Hansen, in Computational Inverse Problems in Electrocardiology, ed. P. Johnston, Advances in Computational Bioengineering http://www.imm.dtu.dk/~pch/TR/Lcurve.ps Alternative Regularisation conditions Regularisation is needed for most unfolding problems, in order to avoid large oscillations and large correlations on the output bins. It means that some extra conditions are applied on the output bins Within TUnfold these conditions are posed on the difference (x-x0), where x: unfolding output x0: the bias distribution, by default calculated from the input matrix A. There is a method SetBias() to change the bias distribution. The 3rd argument to DoUnfold() is a scale factor applied to the bias bias_default[j] = sum_i A[i][j] x0[j] = scaleBias*bias[j] The scale factor can be used to (a) completely suppress the bias by setting it to zero (b) compensate differences in the normalisation between data and Monte Carlo If the regularisation is strong, i.e. large parameter tau, then the distribution x or its derivatives will look like the bias distribution. If the parameter tau is small, the distribution x is independent of the bias. Three basic types of regularisation are implemented in TUnfold condition regularisation kRegModeNone none kRegModeSize minimize the size of (x-x0) kRegModeDerivative minimize the 1st derivative of (x-x0) kRegModeCurvature minimize the 2nd derivative of (x-x0) kRegModeSize is the regularisation scheme which often is found in literature. The second derivative is often named curvature. Sometimes the bias is not discussed, equivalent to a bias scale factor of zero. The regularisation schemes kRegModeDerivative and kRegModeCurvature have the nice feature that they create correlations between x-bins, whereas the non-regularized unfolding tends to create negative correlations between bins. For these regularisation schemes the parameter tau could be tuned such that the correlations are smallest, as an alternative to the L-curve method. If kRegModeSize is chosen or if x is a smooth function through all bins, the regularisation condition can be set on all bins together by giving the appropriate argument in the constructor (see examples above). If x is composed of independent groups of bins (for example, signal and background binning in two variables), it may be necessary to set regularisation conditions for the individual groups of bins. In this case, give kRegModeNone in the constructor and specify the bin grouping with calls to RegularizeBins() specify a 1-dimensional group of bins RegularizeBins2D() specify a 2-dimensional group of bins Note, the class TUnfoldDensity provides an automatic setup of complex regularisation schemes For ultimate flexibility, the regularisation condition can be set on each bin individually -> give kRegModeNone in the constructor and use RegularizeSize() regularize one bin RegularizeDerivative() regularize the slope given by two bins RegularizeCurvature() regularize the curvature given by three bins AddRegularisationCondition() define an arbitrary regulatisation condition

Function Members (Methods)

public:

| virtual | ~TUnfold() |

| void | TObject::AbstractMethod(const char* method) const |

| virtual void | TObject::AppendPad(Option_t* option = "") |

| virtual void | TObject::Browse(TBrowser* b) |

| static TClass* | Class() |

| virtual const char* | TObject::ClassName() const |

| virtual void | TObject::Clear(Option_t* = "") |

| virtual TObject* | TObject::Clone(const char* newname = "") const |

| virtual Int_t | TObject::Compare(const TObject* obj) const |

| virtual void | TObject::Copy(TObject& object) const |

| virtual void | TObject::Delete(Option_t* option = "")MENU |

| virtual Int_t | TObject::DistancetoPrimitive(Int_t px, Int_t py) |

| virtual Double_t | DoUnfold(Double_t tau) |

| Double_t | DoUnfold(Double_t tau, const TH1* hist_y, Double_t scaleBias = 0.) |

| virtual void | TObject::Draw(Option_t* option = "") |

| virtual void | TObject::DrawClass() constMENU |

| virtual TObject* | TObject::DrawClone(Option_t* option = "") constMENU |

| virtual void | TObject::Dump() constMENU |

| virtual void | TObject::Error(const char* method, const char* msgfmt) const |

| virtual void | TObject::Execute(const char* method, const char* params, Int_t* error = 0) |

| virtual void | TObject::Execute(TMethod* method, TObjArray* params, Int_t* error = 0) |

| virtual void | TObject::ExecuteEvent(Int_t event, Int_t px, Int_t py) |

| virtual void | TObject::Fatal(const char* method, const char* msgfmt) const |

| virtual TObject* | TObject::FindObject(const char* name) const |

| virtual TObject* | TObject::FindObject(const TObject* obj) const |

| void | GetBias(TH1* bias, const Int_t* binMap = 0) const |

| Double_t | GetChi2A() const |

| Double_t | GetChi2L() const |

| virtual Option_t* | TObject::GetDrawOption() const |

| static Long_t | TObject::GetDtorOnly() |

| void | GetEmatrix(TH2* ematrix, const Int_t* binMap = 0) const |

| Double_t | GetEpsMatrix() const |

| void | GetFoldedOutput(TH1* folded, const Int_t* binMap = 0) const |

| virtual const char* | TObject::GetIconName() const |

| void | GetInput(TH1* inputData, const Int_t* binMap = 0) const |

| void | GetInputInverseEmatrix(TH2* ematrix) |

| void | GetL(TH2* l) const |

| virtual Double_t | GetLcurveX() const |

| virtual Double_t | GetLcurveY() const |

| void | GetLsquared(TH2* lsquared) const |

| virtual const char* | TObject::GetName() const |

| Int_t | GetNdf() const |

| void | GetNormalisationVector(TH1* s, const Int_t* binMap = 0) const |

| Int_t | GetNpar() const |

| Int_t | GetNr() const |

| virtual char* | TObject::GetObjectInfo(Int_t px, Int_t py) const |

| static Bool_t | TObject::GetObjectStat() |

| virtual Option_t* | TObject::GetOption() const |

| void | GetOutput(TH1* output, const Int_t* binMap = 0) const |

| void | GetProbabilityMatrix(TH2* A, TUnfold::EHistMap histmap) const |

| Double_t | GetRhoAvg() const |

| Double_t | GetRhoI(TH1* rhoi, const Int_t* binMap = 0, TH2* invEmat = 0) const |

| void | GetRhoIJ(TH2* rhoij, const Int_t* binMap = 0) const |

| Double_t | GetRhoMax() const |

| Double_t | GetTau() const |

| virtual const char* | TObject::GetTitle() const |

| static const char* | GetTUnfoldVersion() |

| virtual UInt_t | TObject::GetUniqueID() const |

| virtual Bool_t | TObject::HandleTimer(TTimer* timer) |

| virtual ULong_t | TObject::Hash() const |

| virtual void | TObject::Info(const char* method, const char* msgfmt) const |

| virtual Bool_t | TObject::InheritsFrom(const char* classname) const |

| virtual Bool_t | TObject::InheritsFrom(const TClass* cl) const |

| virtual void | TObject::Inspect() constMENU |

| void | TObject::InvertBit(UInt_t f) |

| virtual TClass* | IsA() const |

| virtual Bool_t | TObject::IsEqual(const TObject* obj) const |

| virtual Bool_t | TObject::IsFolder() const |

| Bool_t | TObject::IsOnHeap() const |

| virtual Bool_t | TObject::IsSortable() const |

| Bool_t | TObject::IsZombie() const |

| virtual void | TObject::ls(Option_t* option = "") const |

| void | TObject::MayNotUse(const char* method) const |

| virtual Bool_t | TObject::Notify() |

| void | TObject::Obsolete(const char* method, const char* asOfVers, const char* removedFromVers) const |

| void | TObject::operator delete(void* ptr) |

| void | TObject::operator delete(void* ptr, void* vp) |

| void | TObject::operator delete[](void* ptr) |

| void | TObject::operator delete[](void* ptr, void* vp) |

| void* | TObject::operator new(size_t sz) |

| void* | TObject::operator new(size_t sz, void* vp) |

| void* | TObject::operator new[](size_t sz) |

| void* | TObject::operator new[](size_t sz, void* vp) |

| TUnfold& | operator=(const TUnfold&) |

| virtual void | TObject::Paint(Option_t* option = "") |

| virtual void | TObject::Pop() |

| virtual void | TObject::Print(Option_t* option = "") const |

| virtual Int_t | TObject::Read(const char* name) |

| virtual void | TObject::RecursiveRemove(TObject* obj) |

| Int_t | RegularizeBins(int start, int step, int nbin, TUnfold::ERegMode regmode) |

| Int_t | RegularizeBins2D(int start_bin, int step1, int nbin1, int step2, int nbin2, TUnfold::ERegMode regmode) |

| Int_t | RegularizeCurvature(int left_bin, int center_bin, int right_bin, Double_t scale_left = 1., Double_t scale_right = 1.) |

| Int_t | RegularizeDerivative(int left_bin, int right_bin, Double_t scale = 1.) |

| Int_t | RegularizeSize(int bin, Double_t scale = 1.) |

| void | TObject::ResetBit(UInt_t f) |

| virtual void | TObject::SaveAs(const char* filename = "", Option_t* option = "") constMENU |

| virtual void | TObject::SavePrimitive(ostream& out, Option_t* option = "") |

| virtual Int_t | ScanLcurve(Int_t nPoint, Double_t tauMin, Double_t tauMax, TGraph** lCurve, TSpline** logTauX = 0, TSpline** logTauY = 0) |

| void | SetBias(const TH1* bias) |

| void | TObject::SetBit(UInt_t f) |

| void | TObject::SetBit(UInt_t f, Bool_t set) |

| void | SetConstraint(TUnfold::EConstraint constraint) |

| virtual void | TObject::SetDrawOption(Option_t* option = "")MENU |

| static void | TObject::SetDtorOnly(void* obj) |

| void | SetEpsMatrix(Double_t eps) |

| virtual Int_t | SetInput(const TH1* hist_y, Double_t scaleBias = 0., Double_t oneOverZeroError = 0., const TH2* hist_vyy = 0, const TH2* hist_vyy_inv = 0) |

| static void | TObject::SetObjectStat(Bool_t stat) |

| virtual void | TObject::SetUniqueID(UInt_t uid) |

| virtual void | ShowMembers(TMemberInspector& insp) const |

| virtual void | Streamer(TBuffer&) |

| void | StreamerNVirtual(TBuffer& ClassDef_StreamerNVirtual_b) |

| virtual void | TObject::SysError(const char* method, const char* msgfmt) const |

| Bool_t | TObject::TestBit(UInt_t f) const |

| Int_t | TObject::TestBits(UInt_t f) const |

| TUnfold(const TUnfold&) | |

| TUnfold(const TH2* hist_A, TUnfold::EHistMap histmap, TUnfold::ERegMode regmode = kRegModeSize, TUnfold::EConstraint constraint = kEConstraintArea) | |

| virtual void | TObject::UseCurrentStyle() |

| virtual void | TObject::Warning(const char* method, const char* msgfmt) const |

| virtual Int_t | TObject::Write(const char* name = 0, Int_t option = 0, Int_t bufsize = 0) |

| virtual Int_t | TObject::Write(const char* name = 0, Int_t option = 0, Int_t bufsize = 0) const |

protected:

private:

| void | InitTUnfold() |

Data Members

public:

| static TObject::<anonymous> | TObject::kBitMask | |

| static TObject::EStatusBits | TObject::kCanDelete | |

| static TObject::EStatusBits | TObject::kCannotPick | |

| static TUnfold::EConstraint | kEConstraintArea | |

| static TUnfold::EConstraint | kEConstraintNone | |

| static TObject::EStatusBits | TObject::kHasUUID | |

| static TUnfold::EHistMap | kHistMapOutputHoriz | |

| static TUnfold::EHistMap | kHistMapOutputVert | |

| static TObject::EStatusBits | TObject::kInvalidObject | |

| static TObject::<anonymous> | TObject::kIsOnHeap | |

| static TObject::EStatusBits | TObject::kIsReferenced | |

| static TObject::EStatusBits | TObject::kMustCleanup | |

| static TObject::EStatusBits | TObject::kNoContextMenu | |

| static TObject::<anonymous> | TObject::kNotDeleted | |

| static TObject::EStatusBits | TObject::kObjInCanvas | |

| static TObject::<anonymous> | TObject::kOverwrite | |

| static TUnfold::ERegMode | kRegModeCurvature | |

| static TUnfold::ERegMode | kRegModeDerivative | |

| static TUnfold::ERegMode | kRegModeMixed | |

| static TUnfold::ERegMode | kRegModeNone | |

| static TUnfold::ERegMode | kRegModeSize | |

| static TObject::<anonymous> | TObject::kSingleKey | |

| static TObject::<anonymous> | TObject::kWriteDelete | |

| static TObject::<anonymous> | TObject::kZombie |

protected:

| TMatrixDSparse* | fA | Input: matrix |

| Double_t | fBiasScale | Input: scale factor for the bias |

| TUnfold::EConstraint | fConstraint | Input: type of constraint to use |

| TArrayI | fHistToX | Input: histogram bins -> matrix indices |

| TMatrixDSparse* | fL | Input: regularisation conditions |

| TUnfold::ERegMode | fRegMode | Input: type of regularisation |

| TArrayD | fSumOverY | Input: sum of all columns |

| Double_t | fTauSquared | Input: regularisation parameter |

| TMatrixDSparse* | fVyy | Input: covariance matrix for y |

| TMatrixD* | fX0 | Input: x0 |

| TArrayI | fXToHist | Input: matrix indices -> histogram bins |

| TMatrixD* | fY | Input: y |

private:

| TMatrixDSparse* | fAx | Result: Ax |

| Double_t | fChi2A | Result: chi**2 contribution from (y-Ax)V(y-Ax) |

| TMatrixDSparse* | fDXDAM[2] | Result: part of derivative dx_k/dA_ij |

| TMatrixDSparse* | fDXDAZ[2] | Result: part of derivative dx_k/dA_ij |

| TMatrixDSparse* | fDXDY | Result: derivative dx/dy |

| TMatrixDSparse* | fDXDtauSquared | Result: derivative dx/dtau |

| TMatrixDSparse* | fE | Result: matrix E |

| TMatrixDSparse* | fEinv | Result: matrix E^(-1) |

| Double_t | fEpsMatrix | machine accuracy for eingenvalue analysis |

| Int_t | fIgnoredBins | number of input bins which are dropped because they have error=0 |

| Double_t | fLXsquared | Result: chi**2 contribution from (x-s*x0)Lsquared(x-s*x0) |

| Int_t | fNdf | Result: number of degrees of freedom |

| Double_t | fRhoAvg | Result: average global correlation |

| Double_t | fRhoMax | Result: maximum global correlation |

| TMatrixDSparse* | fVxx | Result: covariance matrix on x |

| TMatrixDSparse* | fVxxInv | Result: inverse of covariance matrix on x |

| TMatrixDSparse* | fVyyInv | Result: inverse of covariance matrix on y |

| TMatrixD* | fX | Result: x |

Class Charts

Function documentation

const char * GetTUnfoldVersion(void)

void DeleteMatrix(TMatrixD** m)

void DeleteMatrix(TMatrixDSparse** m)

void ClearResults(void)

delete old results (if any) this function is virtual, so derived classes may implement their own method to flag results as non-valid

Double_t DoUnfold(Double_t tau)

main unfolding algorithm. Declared virtual, because other algorithms

could be implemented

Purpose: unfold y -> x

Data members required:

fA: matrix to relate x and y

fY: measured data points

fX0: bias on x

fBiasScale: scale factor for fX0

fVyy: covariance matrix for y

fL: regularisation conditions

fTauSquared: regularisation strength

fConstraint: whether the constraint is applied

Data members modified:

fVyyInv: inverse of input data covariance matrix

fNdf: number of dgerres of freedom

fEinv: inverse of the matrix needed for unfolding calculations

fE: the matrix needed for unfolding calculations

fX: unfolded data points

fDXDY: derivative of x wrt y (for error propagation)

fVxx: error matrix (covariance matrix) on x

fAx: estimate of distribution y from unfolded data

fChi2A: contribution to chi**2 from y-Ax

fChi2L: contribution to chi**2 from L*(x-x0)

fDXDtauSquared: derivative of x wrt tau

fDXDAM[0,1]: matrix parts of derivative x wrt A

fDXDAZ[0,1]: vector parts of derivative x wrt A

fRhoMax: maximum global correlation coefficient

fRhoAvg: average global correlation coefficient

return code:

fRhoMax if(fRhoMax>=1.0) then the unfolding has failed!

TMatrixDSparse * MultiplyMSparseMSparse(const TMatrixDSparse* a, const TMatrixDSparse* b) const

calculate the product of two sparse matrices

a,b: pointers to sparse matrices, where a->GetNcols()==b->GetNrows()

this is a replacement for the call

new TMatrixDSparse(*a,TMatrixDSparse::kMult,*b);

TMatrixDSparse * MultiplyMSparseM(const TMatrixDSparse* a, const TMatrixD* b) const

multiply a Sparse matrix with a non-sparse matrix

a: pointer to sparse matrix

b: pointer to non-sparse matrix

this is a replacement for the call

new TMatrixDSparse(*a,TMatrixDSparse::kMult,*b);

void AddMSparse(TMatrixDSparse* dest, Double_t f, const TMatrixDSparse* src) const

a replacement for

(*dest) += f*(*src)

TString GetOutputBinName(Int_t iBinX) const

given a bin number, return the name of the output bin this method makes more sense for the class TUnfoldDnesity where it gets overwritten

TUnfold(const TH2* hist_A, TUnfold::EHistMap histmap, TUnfold::ERegMode regmode = kRegModeSize, TUnfold::EConstraint constraint = kEConstraintArea)

set up unfolding matrix and initial regularisation scheme

hist_A: matrix that describes the migrations

histmap: mapping of the histogram axes to the unfolding output

regmode: global regularisation mode

constraint: type of constraint to use

data members initialized to something different from zero:

fA: filled from hist_A

fDA: filled from hist_A

fX0: filled from hist_A

fL: filled depending on the regularisation scheme

Treatment of overflow bins

Bins where the unfolding input (Detector level) is in overflow

are used for the efficiency correction. They have to be filled

properly!

Bins where the unfolding output (Generator level) is in overflow

are treated as a part of the generator level distribution.

I.e. the unfolding output could have non-zero overflow bins if the

input matrix does have such bins.

Int_t RegularizeSize(int bin, Double_t scale = 1.)

add regularisation on the size of bin i

bin: bin number

scale: size of the regularisation

return value: number of conditions which have been skipped

modifies data member fL

Int_t RegularizeDerivative(int left_bin, int right_bin, Double_t scale = 1.)

add regularisation on the difference of two bins left_bin: 1st bin right_bin: 2nd bin scale: size of the regularisation return value: number of conditions which have been skipped modifies data member fL

Int_t RegularizeCurvature(int left_bin, int center_bin, int right_bin, Double_t scale_left = 1., Double_t scale_right = 1.)

add regularisation on the curvature through 3 bins (2nd derivative) left_bin: 1st bin center_bin: 2nd bin right_bin: 3rd bin scale_left: scale factor on center-left difference scale_right: scale factor on right-center difference return value: number of conditions which have been skipped modifies data member fL

Int_t RegularizeBins(int start, int step, int nbin, TUnfold::ERegMode regmode)

set regulatisation on a 1-dimensional curve start: first bin step: distance between neighbouring bins nbin: total number of bins regmode: regularisation mode return value: number of errors (i.e. conditions which have been skipped) modifies data member fL

Int_t RegularizeBins2D(int start_bin, int step1, int nbin1, int step2, int nbin2, TUnfold::ERegMode regmode)

set regularisation on a 2-dimensional grid of bins

start: first bin

step1: distance between bins in 1st direction

nbin1: number of bins in 1st direction

step2: distance between bins in 2nd direction

nbin2: number of bins in 2nd direction

return value:

number of errors (i.e. conditions which have been skipped)

modifies data member fL

Double_t DoUnfold(Double_t tau, const TH1* hist_y, Double_t scaleBias = 0.)

Do unfolding of an input histogram tau_reg: regularisation parameter input: input distribution with errors scaleBias: scale factor applied to the bias Data members required: fA, fX0, fL Data members modified: those documented in SetInput() and those documented in DoUnfold(Double_t) Return value: maximum global correlation coefficient NOTE!!! return value >=1.0 means error, and the result is junk Overflow bins of the input distribution are ignored!

Int_t SetInput(const TH1* hist_y, Double_t scaleBias = 0., Double_t oneOverZeroError = 0., const TH2* hist_vyy = 0, const TH2* hist_vyy_inv = 0)

Define the input data for subsequent calls to DoUnfold(Double_t) input: input distribution with errors scaleBias: scale factor applied to the bias oneOverZeroError: for bins with zero error, this number defines 1/error. hist_vyy: if non-zero, defines the data covariance matrix otherwise it is calculated from the data errors hist_vyy_inv: if non-zero and if hist_vyy is set, defines the inverse of the data covariance matrix Return value: number of bins with bad error +10000*number of unconstrained output bins Note: return values>=10000 are fatal errors, for the given input, the unfolding can not be done! Data members modified: fY, fVyy, , fBiasScale Data members cleared fVyyInv, fNdf + see ClearResults

Double_t DoUnfold(Double_t tau)

Unfold with given value of regularisation parameter tau

tau: new tau parameter

required data members:

fA: matrix to relate x and y

fY: measured data points

fX0: bias on x

fBiasScale: scale factor for fX0

fV: inverse of covariance matrix for y

fL: regularisation conditions

modified data members:

fTauSquared and those documented in DoUnfold(void)

Int_t ScanLcurve(Int_t nPoint, Double_t tauMin, Double_t tauMax, TGraph** lCurve, TSpline** logTauX = 0, TSpline** logTauY = 0)

scan the L curve

nPoint: number of points on the resulting curve

tauMin: smallest tau value to study

tauMax: largest tau value to study

lCurve: the L curve as graph

logTauX: output spline of x-coordinates vs tau for the L curve

logTauY: output spline of y-coordinates vs tau for the L curve

return value: the coordinate number (0..nPoint-1) with the "best" choice

of tau

void GetNormalisationVector(TH1* s, const Int_t* binMap = 0) const

get vector of normalisation factors

out: output histogram

binMap: for each bin of the original output distribution

specify the destination bin. A value of -1 means that the bin

is discarded. 0 means underflow bin, 1 first bin, ...

binMap[0] : destination of underflow bin

binMap[1] : destination of first bin

void GetBias(TH1* bias, const Int_t* binMap = 0) const

get bias distribution, possibly with bin remapping

out: output histogram

binMap: for each bin of the original output distribution

specify the destination bin. A value of -1 means that the bin

is discarded. 0 means underflow bin, 1 first bin, ...

binMap[0] : destination of underflow bin

binMap[1] : destination of first bin

void GetFoldedOutput(TH1* folded, const Int_t* binMap = 0) const

get unfolding result, folded back trough the matrix

out: output histogram

binMap: for each bin of the original output distribution

specify the destination bin. A value of -1 means that the bin

is discarded. 0 means underflow bin, 1 first bin, ...

binMap[0] : destination of underflow bin

binMap[1] : destination of first bin

void GetProbabilityMatrix(TH2* A, TUnfold::EHistMap histmap) const

retreive matrix of probabilities

histmap: on which axis to arrange the input/output vector

A: histogram to store the probability matrix

void GetInput(TH1* inputData, const Int_t* binMap = 0) const

retreive input distribution

out: output histogram

binMap: for each bin of the original output distribution

specify the destination bin. A value of -1 means that the bin

is discarded. 0 means underflow bin, 1 first bin, ...

binMap[0] : destination of underflow bin

binMap[1] : destination of first bin

void GetInputInverseEmatrix(TH2* ematrix)

calculate the inverse of the contribution to the error matrix corresponding to the input data

void GetLsquared(TH2* lsquared) const

retreive matrix of regularisation conditions squared out: prebooked matrix

void GetOutput(TH1* output, const Int_t* binMap = 0) const

get output distribution, cumulated over several bins

output: output histogram

binMap: for each bin of the original output distribution

specify the destination bin. A value of -1 means that the bin

is discarded. 0 means underflow bin, 1 first bin, ...

binMap[0] : destination of underflow bin

binMap[1] : destination of first bin

void ErrorMatrixToHist(TH2* ematrix, const TMatrixDSparse* emat, const Int_t* binMap, Bool_t doClear) const

get an error matrix, cumulated over several bins

ematrix: output error matrix histogram

emat: error matrix

binMap: for each bin of the original output distribution

specify the destination bin. A value of -1 means that the bin

is discarded. 0 means underflow bin, 1 first bin, ...

binMap[0] : destination of underflow bin

binMap[1] : destination of first bin

void GetEmatrix(TH2* ematrix, const Int_t* binMap = 0) const

get output error matrix, cumulated over several bins

ematrix: output error matrix histogram

binMap: for each bin of the original output distribution

specify the destination bin. A value of -1 means that the bin

is discarded. 0 means underflow bin, 1 first bin, ...

binMap[0] : destination of underflow bin

binMap[1] : destination of first bin

void GetRhoIJ(TH2* rhoij, const Int_t* binMap = 0) const

get correlation coefficient matrix, cumulated over several bins

rhoij: correlation coefficient matrix histogram

binMap: for each bin of the original output distribution

specify the destination bin. A value of -1 means that the bin

is discarded. 0 means underflow bin, 1 first bin, ...

binMap[0] : destination of underflow bin

binMap[1] : destination of first bin

Double_t GetRhoI(TH1* rhoi, const Int_t* binMap = 0, TH2* invEmat = 0) const

get global correlation coefficients with arbitrary min map

rhoi: global correlation histogram

binMap: for each bin of the original output distribution

specify the destination bin. A value of -1 means that the bin

is discarded. 0 means underflow bin, 1 first bin, ...

binMap[0] : destination of underflow bin

binMap[1] : destination of first bin

return value: maximum global correlation

Double_t GetRhoIFromMatrix(TH1* rhoi, const TMatrixDSparse* eOrig, const Int_t* binMap, TH2* invEmat) const

get global correlation coefficients with arbitrary min map

rhoi: global correlation histogram

emat: error matrix

binMap: for each bin of the original output distribution

specify the destination bin. A value of -1 means that the bin

is discarded. 0 means underflow bin, 1 first bin, ...

binMap[0] : destination of underflow bin

binMap[1] : destination of first bin

return value: maximum global correlation

TMatrixDSparse * MultiplyMSparseTranspMSparse(const TMatrixDSparse* a, const TMatrixDSparse* b) const

TMatrixDSparse * InvertMSparseSymmPos(const TMatrixDSparse* A, Int_t* rank) const

TMatrixDSparse * CreateSparseMatrix(Int_t nrow, Int_t ncol, Int_t nele, Int_t* row, Int_t* col, Double_t* data) const

Bool_t AddRegularisationCondition(Int_t i0, Double_t f0, Int_t i1 = -1, Double_t f1 = 0., Int_t i2 = -1, Double_t f2 = 0.)

Bool_t AddRegularisationCondition(Int_t nEle, const Int_t* indices, const Double_t* rowData)