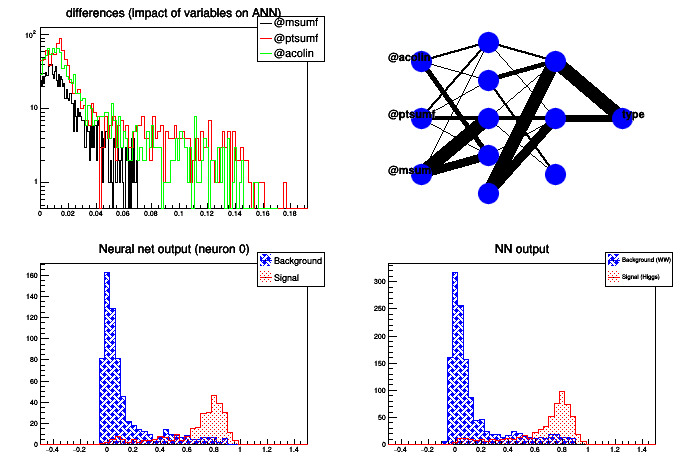

Example of a Multi Layer Perceptron. For a LEP search for an invisible Higgs boson, a neural network was used to separate the signal from the background events that pass some selection cuts.

Here is a simplified version of this network, which takes into account only WW events as background.
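As a rough picture of what the trained network computes, here is a minimal sketch that evaluates a fully connected 3:5:3:1 network, matching the layout string `@msumf,@ptsumf,@acolin:5:3:type` printed in the output: three inputs, hidden layers of 5 and 3 neurons, and one output. It assumes sigmoid hidden neurons, a linear output neuron, and that the `@` prefix normalizes each input as (x - mean) / rms; these are assumptions about TMultiLayerPerceptron's behaviour, not its source code. `Layer`, `applyLayer` and `evaluateMLP` are hypothetical names, and in practice the weights come from training.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

// One fully connected layer: out[j] = b[j] + sum_i w[j][i] * in[i]
struct Layer {
    std::vector<std::vector<double>> w;  // w[j][i]: weight from input i to neuron j
    std::vector<double> b;               // one bias per neuron
};

double sigmoid(double x) { return 1.0 / (1.0 + std::exp(-x)); }

// Apply one layer, optionally passing each neuron's sum through a sigmoid.
std::vector<double> applyLayer(const Layer& L, const std::vector<double>& in, bool useSigmoid) {
    std::vector<double> out(L.b.size());
    for (std::size_t j = 0; j < out.size(); ++j) {
        assert(L.w[j].size() == in.size());
        double s = L.b[j];
        for (std::size_t i = 0; i < in.size(); ++i) s += L.w[j][i] * in[i];
        out[j] = useSigmoid ? sigmoid(s) : s;
    }
    return out;
}

// Forward pass: normalize the inputs (assumed meaning of "@"), then apply
// sigmoid hidden layers and a linear last layer with a single neuron.
double evaluateMLP(const std::vector<Layer>& layers,
                   std::vector<double> x,
                   const std::vector<double>& mean,
                   const std::vector<double>& rms) {
    assert(x.size() == mean.size() && x.size() == rms.size());
    for (std::size_t i = 0; i < x.size(); ++i) x[i] = (x[i] - mean[i]) / rms[i];
    for (std::size_t k = 0; k < layers.size(); ++k) {
        bool hidden = (k + 1 < layers.size());  // only the last layer is linear
        x = applyLayer(layers[k], x, hidden);
    }
    assert(x.size() == 1);
    return x[0];
}
```

For example, if every weight and bias is zero except output weights of 1.0, each sigmoid neuron outputs 0.5 and the network returns 3 × 0.5 = 1.5, whatever the inputs.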

accessing mlpHiggs.root file from http://root.cern.ch/files

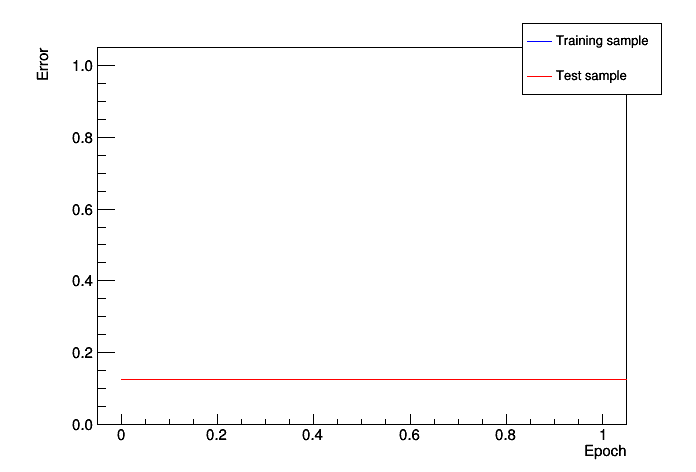

Training the Neural Network

Epoch: 0 learn=0.126964 test=0.126101

Epoch: 10 learn=0.099816 test=0.0944112

Epoch: 20 learn=0.0967496 test=0.0914356

Epoch: 30 learn=0.0957444 test=0.0902211

Epoch: 40 learn=0.0929555 test=0.089151

Epoch: 50 learn=0.0922609 test=0.0886525

Epoch: 60 learn=0.0916939 test=0.0882441

Epoch: 70 learn=0.0910837 test=0.0879216

Epoch: 80 learn=0.0907021 test=0.0880823

Epoch: 90 learn=0.0901749 test=0.086813

Epoch: 99 learn=0.0901028 test=0.0863809

Training done.
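The `learn` and `test` columns report the network's error on the training and test samples, printed every 10 epochs; in this run both decrease overall, and the test error stays below the learning error. Because `ptsumf` is passed as the event weight, such an error is a weighted average over events. The sketch below shows one common definition, the weighted mean of 0.5 * (output - target)^2. This normalization is an assumption for illustration, not TMultiLayerPerceptron's exact formula, and `weightedError` is a hypothetical helper.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Weighted mean of 0.5 * (output - target)^2 over one sample.
// Assumption: illustrates the kind of quantity printed as learn=/test=,
// not TMultiLayerPerceptron's exact normalization.
double weightedError(const std::vector<double>& output,
                     const std::vector<double>& target,
                     const std::vector<double>& weight) {
    assert(output.size() == target.size() && output.size() == weight.size());
    double sum = 0.0, wsum = 0.0;
    for (std::size_t k = 0; k < output.size(); ++k) {
        double d = output[k] - target[k];
        sum  += weight[k] * 0.5 * d * d;  // event contribution, scaled by its weight
        wsum += weight[k];
    }
    return wsum > 0.0 ? sum / wsum : 0.0;
}
```

With this definition, events with a large weight (here, high `ptsumf`) dominate the error, which is the stated reason for choosing `ptsumf` as the weight.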

test.py created.

Network with structure: @msumf,@ptsumf,@acolin:5:3:type

inputs with low values in the differences plot may not be needed

@msumf -> 0.016667 +/- 0.0132143

@ptsumf -> 0.0267861 +/- 0.0378194

@acolin -> 0.025739 +/- 0.0295963
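The lines above rank the three inputs by how strongly the network output reacts to each of them; inputs with small values may not be needed. The sketch below shows one way such a sensitivity can be measured: shift input j up and down by a fraction of its spread, take |f(x+) - f(x-)| for every event, and report the mean and RMS over events. The 0.1 fraction and the absolute difference are assumptions; this is not TMLPAnalyzer's implementation, and `inputRelevance` and `Relevance` are hypothetical names.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <functional>
#include <vector>

struct Relevance { double mean; double rms; };

// For each input j: shift it by +/- (shift * spread[j]), record |f(x+) - f(x-)|
// for every event, and return the mean and RMS of that difference.
std::vector<Relevance> inputRelevance(
        const std::function<double(const std::vector<double>&)>& f,
        const std::vector<std::vector<double>>& events,
        const std::vector<double>& spread,
        double shift = 0.1) {
    assert(!events.empty());
    const std::size_t n = spread.size();
    std::vector<Relevance> result(n);
    for (std::size_t j = 0; j < n; ++j) {
        double sum = 0.0, sum2 = 0.0;
        for (const auto& ev : events) {
            assert(ev.size() == n);
            std::vector<double> x = ev;
            x[j] = ev[j] + shift * spread[j];
            double up = f(x);
            x[j] = ev[j] - shift * spread[j];
            double down = f(x);
            double d = std::fabs(up - down);
            sum += d;
            sum2 += d * d;
        }
        double m = sum / events.size();
        double var = sum2 / events.size() - m * m;
        result[j] = {m, std::sqrt(var > 0.0 ? var : 0.0)};  // clamp rounding noise
    }
    return result;
}
```

An input that the function ignores gets a relevance of exactly zero, which is the situation the "may not be needed" hint is meant to flag.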

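    #include <cstdio>
    #include "TFile.h"
    #include "TTree.h"
    #include "TSystem.h"
    #include "TString.h"
    #include "TCanvas.h"
    #include "TH1F.h"
    #include "TLegend.h"
    #include "TMultiLayerPerceptron.h"
    #include "TMLPAnalyzer.h"

    void mlpHiggs(Int_t ntrain=100) {
       const char *fname = "mlpHiggs.root";
       TFile *input = nullptr;
       // Use a local copy of the file if there is one, otherwise read it over HTTP.
       // Note that AccessPathName() returns kFALSE when the file exists.
       if (!gSystem->AccessPathName(fname)) {
          input = TFile::Open(fname);
       } else {
          printf("accessing %s file from http://root.cern.ch/files\n",fname);
          input = TFile::Open(Form("http://root.cern.ch/files/%s",fname));
       }
       if (!input) return;

       // Pre-filtered signal (Higgs) and background (WW) samples
       TTree *sig_filtered = (TTree *) input->Get("sig_filtered");
       TTree *bg_filtered  = (TTree *) input->Get("bg_filtered");
       if (!sig_filtered || !bg_filtered) { delete input; return; }

       // Merge both samples into one tree; "type" is the network target
       // (1 = signal, 0 = background).
       TTree *simu = new TTree("MonteCarlo", "Filtered Monte Carlo Events");
       Float_t ptsumf, qelep, nch, msumf, minvis, acopl, acolin;
       Int_t type;
       for (TTree *t : {sig_filtered, bg_filtered}) {
          t->SetBranchAddress("ptsumf", &ptsumf);
          t->SetBranchAddress("qelep",  &qelep);
          t->SetBranchAddress("nch",    &nch);
          t->SetBranchAddress("msumf",  &msumf);
          t->SetBranchAddress("minvis", &minvis);
          t->SetBranchAddress("acopl",  &acopl);
          t->SetBranchAddress("acolin", &acolin);
       }
       simu->Branch("ptsumf", &ptsumf, "ptsumf/F");
       simu->Branch("qelep",  &qelep,  "qelep/F");
       simu->Branch("nch",    &nch,    "nch/F");
       simu->Branch("msumf",  &msumf,  "msumf/F");
       simu->Branch("minvis", &minvis, "minvis/F");
       simu->Branch("acopl",  &acopl,  "acopl/F");
       simu->Branch("acolin", &acolin, "acolin/F");
       simu->Branch("type",   &type,   "type/I");
       Long64_t i;
       type = 1;
       for (i = 0; i < sig_filtered->GetEntries(); i++) {
          sig_filtered->GetEntry(i);
          simu->Fill();
       }
       type = 0;
       for (i = 0; i < bg_filtered->GetEntries(); i++) {
          bg_filtered->GetEntry(i);
          simu->Fill();
       }

       // Build and train the network: inputs msumf, ptsumf and acolin
       // (the "@" prefix normalizes them), hidden layers of 5 and 3 neurons,
       // one output trained on "type". ptsumf is used as the event weight,
       // since high-pt events are of primary interest. Odd entries form the
       // training sample and even entries the test sample.
       TMultiLayerPerceptron *mlp =
          new TMultiLayerPerceptron("@msumf,@ptsumf,@acolin:5:3:type",
                                    "ptsumf",simu,"Entry$%2","(Entry$+1)%2");
       mlp->Train(ntrain, "text,graph,update=10");
       // Export the trained network as a standalone Python module (test.py)
       mlp->Export("test","python");

       // Use TMLPAnalyzer to see what the network is sensitive to
       TCanvas *mlpa_canvas = new TCanvas("mlpa_canvas","Network analysis");
       mlpa_canvas->Divide(2,2);
       TMLPAnalyzer ana(mlp);
       ana.GatherInformations();                  // must be called first
       ana.CheckNetwork();                        // prints input relevance to the console
       mlpa_canvas->cd(1);
       ana.DrawDInputs();                         // influence of each input on the output
       mlpa_canvas->cd(2);
       mlp->Draw();                               // network structure
       mlpa_canvas->cd(3);
       ana.DrawNetwork(0,"type==1","type==0");    // output for signal vs background (test sample)
       mlpa_canvas->cd(4);

       // Evaluate the network on every entry of each sample. Unlike DrawNetwork,
       // this uses all entries, and the x-axis range is set by hand.
       TH1F *bg  = new TH1F("bgh",  "NN output", 50, -.5, 1.5);
       TH1F *sig = new TH1F("sigh", "NN output", 50, -.5, 1.5);
       bg->SetDirectory(nullptr);   // keep the histograms alive after "delete input"
       sig->SetDirectory(nullptr);
       Double_t params[3];
       for (i = 0; i < bg_filtered->GetEntries(); i++) {
          bg_filtered->GetEntry(i);
          params[0] = msumf;
          params[1] = ptsumf;
          params[2] = acolin;
          bg->Fill(mlp->Evaluate(0, params));
       }
       for (i = 0; i < sig_filtered->GetEntries(); i++) {
          sig_filtered->GetEntry(i);
          params[0] = msumf;
          params[1] = ptsumf;
          params[2] = acolin;
          sig->Fill(mlp->Evaluate(0, params));
       }
       bg->SetLineColor(kBlue);
       sig->SetLineColor(kRed);
       bg->SetStats(0);
       sig->SetStats(0);
       bg->Draw();
       sig->Draw("same");
       TLegend *legend = new TLegend(.75, .80, .95, .95);
       legend->AddEntry(bg, "Background (WW)");
       legend->AddEntry(sig, "Signal (Higgs)");
       legend->Draw();
       mlpa_canvas->cd(0);
       delete input;
    }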
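The selections `"Entry$%2"` and `"(Entry$+1)%2"` passed to the TMultiLayerPerceptron constructor choose the training and test entries. A selection keeps an entry when it evaluates to non-zero, so odd entries are used for training and even entries for testing. The sketch below mimics that in plain C++, after concatenating signal (type 1) and background (type 0) in the same order as the macro fills the `MonteCarlo` tree. The `Event` struct and `buildSamples` function are hypothetical stand-ins for the ROOT tree and selections.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Hypothetical event record: the three network inputs plus the target label.
struct Event {
    double msumf, ptsumf, acolin;
    int type;  // 1 = signal, 0 = background
};

// Concatenate signal then background, then split by entry parity:
// "Entry$%2" keeps odd entries (training), "(Entry$+1)%2" keeps even ones (test).
void buildSamples(const std::vector<Event>& sig, const std::vector<Event>& bg,
                  std::vector<Event>& train, std::vector<Event>& test) {
    std::vector<Event> all;
    all.reserve(sig.size() + bg.size());
    for (Event e : sig) { e.type = 1; all.push_back(e); }
    for (Event e : bg)  { e.type = 0; all.push_back(e); }
    train.clear();
    test.clear();
    for (std::size_t entry = 0; entry < all.size(); ++entry) {
        if (entry % 2 != 0) train.push_back(all[entry]);       // Entry$%2 is non-zero
        if ((entry + 1) % 2 != 0) test.push_back(all[entry]);  // (Entry$+1)%2 is non-zero
    }
    assert(train.size() + test.size() == all.size());
}
```

Because the split alternates entry by entry instead of cutting the merged tree in half, each sample receives about half of the signal and half of the background, even though every signal entry precedes the background ones.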
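The result histograms are booked with 50 bins between -0.5 and 1.5. That range is wider than the 0 (background) and 1 (signal) targets, presumably because the network output, assuming the default linear output neuron, is not confined to [0, 1]. The sketch below is a minimal fixed-width histogram following ROOT's bin-numbering convention: bin 0 holds underflow, bins 1 to nbins cover the axis range, and bin nbins+1 holds overflow. `Hist1D` is a hypothetical stand-in for illustration, not TH1F's implementation.

```cpp
#include <cassert>
#include <vector>

// Fixed-width 1D histogram with ROOT-style bin numbering:
// 0 = underflow, 1..nbins = [xmin, xmax), nbins+1 = overflow.
struct Hist1D {
    int nbins;
    double xmin, xmax;
    std::vector<double> counts;  // nbins + 2 entries, including under/overflow

    Hist1D(int n, double lo, double hi)
        : nbins(n), xmin(lo), xmax(hi), counts(n + 2, 0.0) {
        assert(n > 0 && hi > lo);
    }

    int findBin(double x) const {
        if (x < xmin) return 0;
        if (x >= xmax) return nbins + 1;
        int b = 1 + static_cast<int>(nbins * (x - xmin) / (xmax - xmin));
        return b > nbins ? nbins : b;  // guard against rounding at the upper edge
    }

    void fill(double x) { counts[findBin(x)] += 1.0; }
};
```

With the booking used in the macro each bin is 0.04 wide, so `Hist1D h(50, -0.5, 1.5)` puts an output of 0.0 in bin 13, which covers [-0.02, 0.02).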

- Author: Christophe Delaere

Definition in file mlpHiggs.C.
